Azure Machine Learning–experimenting with training data proportions using the SMOTE module

A short while ago, I was trying to classify some data using Azure Machine Learning, but the training data was very imbalanced. In the attempt to build a useful model from this data, I came across the Synthetic Minority Oversampling Technique (SMOTE), an approach to dealing with imbalanced training data.

This blog post describes what I learnt. First, I'll step back and give a quick intro to the Azure Machine Learning service and its use of training data. Then I'll give an overview of SMOTE and Azure Machine Learning's SMOTE functionality. Next, I'll talk about why you might want to use this functionality. Finally, I'll describe how I've used it with a multi-class classification problem.

Azure Machine Learning and training data

Machine learning addresses the problem of working out something unknown about an entity, based on things that are known about it. This can be described as 'classification' or 'prediction'. The unknown property or class is predicted using a model which is (usually) built using an algorithm and a set of training data.

The training data consists of data about a set of known entities of the type you want to classify, including the value of the property you want the trained model to predict. For example, if you were building a predictive model to help you prevent customer churn, the training data would consist of a set of customer records combining any available information about the customer (for example, demographic and service-use information), along with a property indicating whether or not the customer had left.

Before being used to train a model, training data is piped through a series of pre-processing operations. Once it's ready, you use it to create models generated by a variety of algorithms, and compare the success of each algorithm, by checking the predicted value against the known value.

Azure Machine Learning is a cloud application which provides a GUI for building machine learning models, and lets you expose these models as web service endpoints. The web service endpoints may be accessed directly by an application which needs to get predictions on the fly, or they might form part of a larger data processing operation such as an Azure Data Factory pipeline.

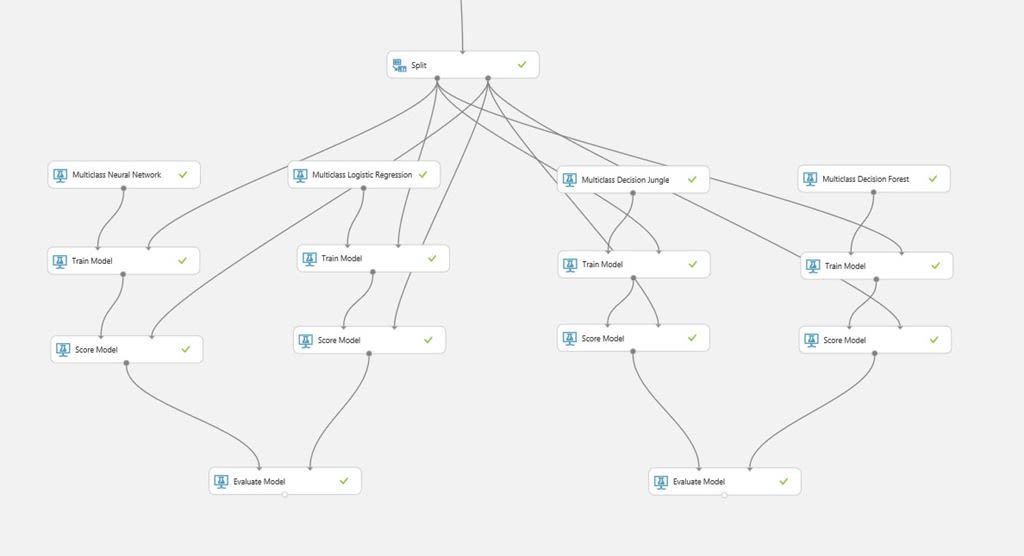

Within Azure Machine Learning, pre-processing, training and evaluation are carried out using 'modules', ready-made pieces of functionality which are visually represented as blocks that can be dragged onto a canvas, connected together and re-arranged like a Visio diagram.

Modules in an Azure Machine Learning experiment canvas

If you need more flexibility, you can use custom modules that run your own R or Python scripts (more R functionality will become available over the next year following Microsoft's acquisition of Revolution Analytics).

The service is very easy to use, and opens up machine learning to a wide range of people (like myself) who are not data scientists. However, the ease of use masks the complexity and mathematical nature of this field. To build a useful real-world model, it is necessary to step back and learn a bit about the underlying concepts. Fortunately, because the service is easy to use, it encourages experimentation which can help you build a useful model without a PhD.

The SMOTE module

A lot of the work of machine learning revolves around acquiring and pre-processing the training data.

Very often, training data is not balanced. Looking at two-class classification, where you want to determine whether or not an entity has some particular property, there are often far more items which don't have the property than items which do. Going back to the customer churn example, (hopefully) far more customers will stay with the service than leave it. And considering scenarios like fraud detection, instances of fraud are likely to be extremely rare in comparison to legitimate transactions.

Azure Machine Learning provides a SMOTE module which can be used to generate additional training data for the minority class. SMOTE stands for Synthetic Minority Oversampling Technique, a methodology proposed by N. V. Chawla, K. W. Bowyer, L. O. Hall and W. P. Kegelmeyer in their 2002 paper SMOTE: Synthetic Minority Over-Sampling Technique.

The core concept is pretty simple. The known features of items in the under-represented class are sampled, and new synthetic items of that class are generated by combining the features of a sampled item with those of its nearest neighbours in the same class.
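To make the idea concrete, here is a minimal sketch of the interpolation step in plain Python. It is a simplification of the technique described in the paper, not the code behind the Azure module: the function name `smote_sketch`, its parameters and the sample points are all made up for illustration.

```python
import math
import random


def smote_sketch(minority, n_new, k=2, seed=0):
    """Generate n_new synthetic points by interpolating between minority neighbours.

    Hypothetical helper for illustration only; not the Azure module's implementation.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        # Pick a real minority item at random.
        base = rng.choice(minority)
        # Find its k nearest minority neighbours (excluding the item itself).
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        # Place the new item at a random point on the line between the two.
        gap = rng.random()
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Made-up minority class items, each with two numeric features.
minority_items = [(1.0, 2.0), (1.5, 1.8), (2.0, 2.2), (1.2, 2.5)]
new_items = smote_sketch(minority_items, n_new=4)
print(new_items)
```

Because each new item sits somewhere between two real minority items, the synthetic data stays inside the region the minority class already occupies rather than being an exact copy of an existing row.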

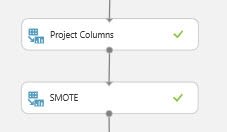

To use SMOTE in Azure, you drag a SMOTE module onto the experiment canvas, and connect it to the output of any previous modules which read and pre-process the training data.

In the module's properties panel, you select the column containing the class that you want the model to identify. This allows the module to determine what the minority class for this property is. In the example below, we are building a model which will determine an item's category.

All other columns passed into the module will be sampled by the SMOTE module.

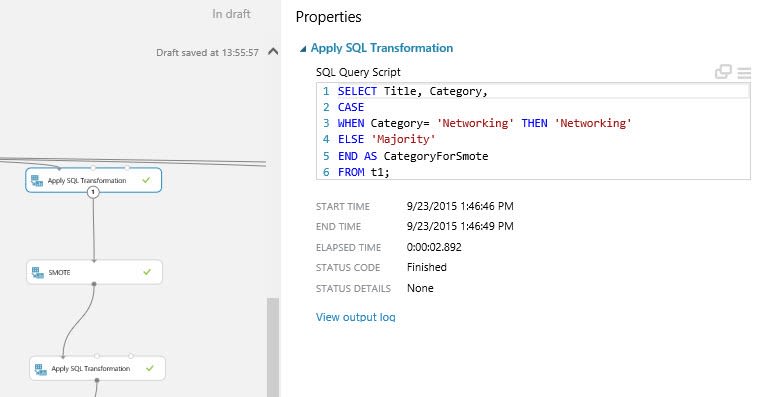

You also specify how many items should be sampled to generate a new item ('Number of nearest neighbours'), and the amount by which to increase the percentage of minority cases in the output dataset ('SMOTE percentage').

The SMOTE percentage must be at least 100, and must be provided in multiples of 100 (according to the docs, this second restriction will be removed in future). 100 will not generate any new items. 200 will double the percentage of items for the minority class in the output dataset. It's important to understand that it's the percentage of minority class items in the output dataset that will be doubled, rather than the actual number of minority items.
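The difference between doubling the number of minority items and doubling their percentage is easy to check with some arithmetic. The sketch below uses made-up counts (900 majority, 100 minority) and hypothetical helper names. It only illustrates the distinction, and makes no claim about how the module calculates its output.

```python
def minority_share(minority, majority):
    """Proportion of the dataset that belongs to the minority class."""
    return minority / (minority + majority)


def minority_needed_for_share(majority, target_share):
    """Minority count needed for the minority class to reach target_share."""
    return target_share * majority / (1 - target_share)


# Hypothetical counts: the minority class starts at 10% of the data.
majority, minority = 900, 100
start = minority_share(minority, majority)

# Doubling the *number* of minority items does not double their share.
share_if_count_doubled = minority_share(2 * minority, majority)

# Doubling the *share* needs more than double the number of items.
needed = minority_needed_for_share(majority, 2 * start)

print(f"starting share: {start:.1%}")
print(f"share after doubling the count: {share_if_count_doubled:.1%}")
print(f"minority items needed to double the share: {needed:.0f}")
```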

The module outputs the original dataset, plus the new items.

Does training data need to be balanced?

You might wonder, does imbalanced training data matter? If there are far more instances of one outcome than another, doesn't that imbalance reflect the comparative likelihood of each outcome?

There isn't a simple answer to this question (or if there is I don't know it).

Different machine learning algorithms will respond differently to an imbalanced training set. Rather than applying a hard-and-fast rule, it's best to carry out experiments with your training data and a range of algorithms, and identify the most effective combination for the problem you are trying to solve.

Focusing on the problem you want to solve is important. The success of a machine learning model can be measured in several ways, and some measures will be more relevant to the problem you want to solve.

Azure Machine Learning provides several metrics. These include:

- Accuracy: the proportion of correctly predicted items

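- Precision: the proportion of items predicted as positive that really are positive

- Recall: the true positive rate, that is, the proportion of actual positives that the model finds

- F1 measure: a combined measure of precision and recall (their harmonic mean)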

One effect of having an imbalanced training set is that accuracy becomes less useful as a measure of a model's success. Adapting an example from the Azure docs, if 99% of transactions are not fraudulent, then an algorithm could achieve 99% accuracy by classifying all transactions as non-fraudulent. Precision and recall become more useful metrics.
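The fraud example is easy to reproduce. The sketch below computes the four metrics by hand for a made-up dataset of 1,000 transactions, 1% of them fraudulent, and a "model" that labels everything as legitimate. The function name `evaluate` and the data are assumptions for illustration. Precision is undefined when nothing is predicted positive, so the sketch reports it as 0.0 by convention.

```python
def evaluate(actual, predicted, positive=1):
    """Compute accuracy, precision, recall and F1 from two lists of labels."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)
    fp = sum(a != positive and p == positive for a, p in pairs)
    fn = sum(a == positive and p != positive for a, p in pairs)
    tn = sum(a != positive and p != positive for a, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    # Report 0.0 rather than dividing by zero when a metric is undefined.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up data: 10 fraudulent transactions (1) and 990 legitimate ones (0).
actual = [1] * 10 + [0] * 990
always_legitimate = [0] * 1000

scores = evaluate(actual, always_legitimate)
print(scores)
```

The accuracy looks excellent, while recall shows that the model has not caught a single fraudulent transaction.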

Ideally, a machine learning model would have 100% accuracy, 100% precision (e.g. every customer identified as being likely to leave was really about to leave) and 100% recall (e.g. it wouldn't miss any customers who were likely to leave). For any real-world classification problem complex enough that machine learning outperforms a rule-based approach, this is unlikely, and there is a trade-off between precision and recall, which is visually represented as a precision/recall curve. You might be more concerned with recall (it's most important not to miss any customers who are about to leave), or with precision (you want to focus your resources on retaining only those customers who seem most likely to leave). Looking at the fraud example, where a fraudulent transaction can be very damaging, recall is likely to be more important than precision.

For some algorithms, using synthetic minority oversampling will increase recall, although this will come at the cost of precision.

The authors of the original SMOTE paper found they had the best results by both over-sampling the minority class and under-sampling the majority class, when using a Ripper or a Naive Bayes algorithm.

I've found that the SMOTE module is useful for increasing the accuracy of certain multi-class classification algorithms (particularly the multi-class logistic regression algorithm), when the initial training dataset is small. This is described in the next section.

As with all machine learning, experimentation is the key to finding out whether SMOTE will work best for your problem.

Using the SMOTE module for multi-class classification

I've experimented with Azure Machine Learning using SMOTE to help predict the category of items in endjin's Azure Weekly newsletter. The newsletter provides a commentary on the week's Azure news and aggregates official and community blog posts. Generating a newsletter manually is time-consuming, so we automate the repetitive parts of the process, such as categorising the blog posts.

Blog posts can have one of nine possible categories. Our training data consists of the set of items linking to blog posts from back issues, along with the category each was assigned after automated classification and a human check. We use the feature hashing module to generate a set of features from the textual content of the articles. The training data is imbalanced: there are many more blog posts categorised as Management and Automation than as Hybrid integration, Media Services or Networking.

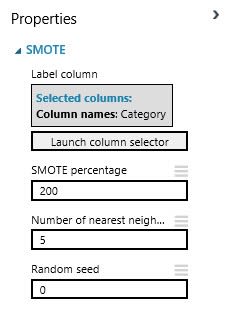
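Feature hashing, mentioned above, turns free text into a fixed-length vector of numbers that a classifier can work with. The sketch below shows the general hashing trick in plain Python. It is not the Azure module's implementation; the function name, the bucket count and the sample title are invented for illustration.

```python
import hashlib


def hash_features(text, n_buckets=16):
    """Map the words in text to a fixed-length vector of counts."""
    vector = [0] * n_buckets
    for token in text.lower().split():
        # md5 gives a hash that is stable across runs, unlike Python's built-in hash().
        digest = hashlib.md5(token.encode("utf-8")).hexdigest()
        # The hash decides which bucket this word is counted in.
        vector[int(digest, 16) % n_buckets] += 1
    return vector


# Hypothetical blog post title.
title = "Scaling Azure Media Services with Azure Automation"
features = hash_features(title)
print(features)
```

Every piece of text ends up as a vector of the same length, however many distinct words it contains. The price is that unrelated words can occasionally land in the same bucket.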

The Azure Machine Learning SMOTE module is designed for use with two-class classification problems, where there is a single minority class. To adapt it for a multi-class classification problem:

1. For each class, use a SQL Transform module to add a new column to the training dataset which contains the minority class label for minority class items, and a dummy value for all other class items.

2. Pipe this into the SMOTE module, pointing it at the new column you've added.

3. From the output of the SMOTE module, select all items with the minority class, limiting how many are selected if you want greater control over how many are included.

4. Combine the output from steps 1-3 for all classes, using the SQL Transform module.
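Outside the GUI, the same flow can be sketched in a few lines of Python. This is only an outline of the steps above, under some loud assumptions: `oversample` is a crude stand-in for the SMOTE module (it interpolates between random pairs of minority rows, with no nearest-neighbour search), the feature values are made up, and every class needs at least two rows for the stand-in to work.

```python
import random
from collections import Counter


def oversample(rows, label_key, minority_label, n_new, rng):
    """Stand-in for the SMOTE module: interpolate between pairs of minority rows."""
    minority = [r for r in rows if r[label_key] == minority_label]
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(minority, 2)  # needs at least two minority rows
        gap = rng.random()
        features = [x + gap * (y - x) for x, y in zip(a["features"], b["features"])]
        synthetic.append({"features": features, label_key: minority_label})
    # Like the module, return the original rows plus the new ones.
    return rows + synthetic


def balance_multiclass(rows, target_per_class, seed=0):
    rng = random.Random(seed)
    combined = []
    for cls in sorted({r["category"] for r in rows}):
        # Step 1: add a column holding the class label, or a dummy value.
        flagged = [dict(r, flag=cls if r["category"] == cls else "other") for r in rows]
        count = sum(r["flag"] == cls for r in flagged)
        # Step 2: oversample, pointing at the new column.
        output = oversample(flagged, "flag", cls, max(0, target_per_class - count), rng)
        # Step 3: keep only this class's items, limiting how many are selected.
        kept = [r for r in output if r["flag"] == cls][:target_per_class]
        # Step 4: combine the results for all classes.
        combined.extend({"features": r["features"], "category": cls} for r in kept)
    return combined


# Made-up training rows: one well-represented category and two rare ones.
rows = (
    [{"features": [0.1 * i, 1.0], "category": "Management and Automation"} for i in range(4)]
    + [{"features": [2.0 + i, 0.5], "category": "Networking"} for i in range(2)]
    + [{"features": [5.0, 3.0 + i], "category": "Media Services"} for i in range(2)]
)
balanced = balance_multiclass(rows, target_per_class=4)
counts = Counter(r["category"] for r in balanced)
print(counts)
```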

Using SMOTE in this way increased the accuracy of my models for all multi-class classifiers, with the Logistic Regression Classifier emerging as the winner. This might have had as much to do with increasing the overall amount of training data as with balancing it.

Could I do this more simply using a custom script? Almost certainly. Over the next few months I'll be trying to get to grips with R, and will post some updates here if I get a chance.

In the meantime, the SMOTE module has provided an easy way to learn and experiment with different ways of building my model.

Further Reading

- SMOTE: Synthetic Minority Over-Sampling Technique, by N. V. Chawla, K. W. Bowyer, L. O. Hall and W. P. Kegelmeyer

- Azure Machine Learning SMOTE docs

- http://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/

- https://www.reddit.com/r/MachineLearning/comments/12evgi/classification_when_80_of_my_training_set_is_of/

- http://stats.stackexchange.com/questions/97555/handling-unbalanced-data-using-smote-no-big-difference
