Browse our archives by topic…

Machine Learning

SQLbits 2022 - The Best Bits

This is a summary of the sessions I attended at SQLbits 2022 in London, which is Europe's largest expert led data conference.

Do robots dream of counting sheep?

Some of my thoughts inspired whilst helping out on the farm over the weekend. What is the future of work given the increasing presence of machines in our day to day lives? In which situations can AI deliver greatest value? How can we ease the stress of digital transformation on people who are impacted by it?

Learning from Covid-19

Summary of key themes from the Doing Data Together conference hosted virtually by The Scotsman newspaper and Edinburgh University in November 2020. The conference agenda was pivoted to focus on the use of data to help tackle the Covid-19 pandemic. It provided a fascinating insight into the lessons learned.

How to use SQL Notebooks to access Azure Synapse SQL Pools & SQL on demand

Wishing Azure Synapse Analytics had support for SQL notebooks? Fear not, it's easy to take advantage rich interactive notebooks for SQL Pools and SQL on Demand.

Does Azure Synapse Analytics spell the end for Azure Databricks?

Have you or are you about to invest in Azure Databricks? If so, the new Spark offering in Azure Synapse Analytics is likely to have grabbed your attention and rightly so. Why is Microsoft putting yet another Spark offering on the table and what does it mean for you?

5 Reasons why Azure Synapse Analytics should be on your roadmap

For years we have been building modern cloud data solutions on Azure and helping our customers transform their use of data to drive outcomes. Here are 5 reasons why Azure Synapse Analytics might just be the service that we have been crying out for.

Recording of Azure Oxford talk on combatting illegal fishing with Azure (for less than £10/month)

Jess and Carmel recently gave a talk at Azure Oxford on "Combatting illegal fishing with Machine Learning and Azure - for less than £10 / month). The recording of that talk is now available for viewing!The talk focuses on the recent work we completed with OceanMind. They run through how to construct a cloud-first architecture based on serverless and data analytics technologies and explore the important principles and challenges in designing this kind of solution. Finally, we see how the architecture we designed through this process not only provides all the benefits of the cloud (reliability, scalability, security), but because of the pay-as-you-go compute model, has a compute cost that we could barely believe!

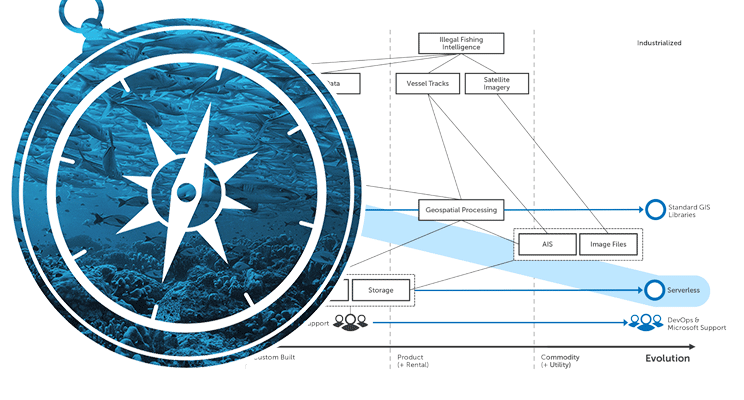

Wardley Maps - Explaining how OceanMind use Microsoft Azure & AI to combat Illegal Fishing

Wardley Maps are a fantastic tool to help provide situational awareness, in order to help you make better decisions. We use Wardley Maps to help our customers think about the various benefits and trade-offs that can be made when migrating to the Cloud. In this blog post, Jess Panni demonstrates how we used Wardley Maps to plan the migration of OceanMind to Microsoft Azure, and how the maps highlighted where the core value of their platform was, and how PaaS and Serverless services offered the most value for money for the organisation.

British Science Week - inspiring the next generation of data scientists

The theme of this year's British Science Week (6 - 15 March 2020) is "Our Diverse Planet". We'll be getting involved by speaking to school children about the work we've been doing with Oxfordshire-based OceanMind (part of the Microsoft AI for Good programme) to help them combat illegal fishing, hopefully inspiring some of the next generation of data scientists!

NDC London 2020 - My highlights

Ed attended NDC London 2020, along with many of his endjin colleagues. In this post he summarises and reflects upon his favourite sessions of the conference including; "OWASP Top Ten proactive controls" by Jim Manico, "There's an Impostor in this room!" by Angharad Edwards, "How to code music?" by Laura Silvanavičiūtė, "ML and the IoT: Living on the Edge" by Brandon Satrom, "Common API Security Pitfalls" by Philippe De Ryck, and "Combatting illegal fishing with Machine Learning and Azure - for less than £10 / month" by Jess Panni & Carmel Eve.

AI for Good Hackathon

Towards the end of last year, Microsoft invited endjin along to a hackathon session they hosted at the IET in London as part of their AI for Good initiative. I've been thinking about the event and the broader work Microsoft is doing here a lot lately, because it gets to the heart of what I love about working in this industry: computers can magnify our power to do to good.

Speaking at NDC London: Combatting illegal fishing with Machine Learning and Azure

In January 2020, Carmel is speaking about creating high performance geospatial algorithms in C# which can detect suspicious vessel activity, which is used to help alert law enforcement to illegal fishing. The input data is fed from Azure Data Lake Storage Gen 2, and converted into data projections optimised for high-performance computation. This code is then hosted in Azure Functions for cheap, consumption based processing.

Import and export notebooks in Databricks

Sometimes it's necessary to import and export notebooks from a Databricks workspace. This might be because you have some generic notebooks that can be useful across numerous workspaces, or it could be that you're having to delete your current workspace for some reason and therefore need to transfer content over to a new workspace. Importing and exporting can be doing either manually or programmatically. In this blog, we outline a way to recursively export/import a directory and its files from/to a Databricks workspace.

Demystifying machine learning using neural networks

Machine learning often seems like a black box. This post walks through what's actually happening under the covers, in an attempt to de-mystify the process!Neural networks are built up of neurons. In a shallow neural network we have an input layer, a "hidden" layer of neurons, and an output layer. For deep learning, there is simply more hidden layers which allows for combining neuron's inputs and outputs to build up a more detailed picture.If you have an interest in Machine Learning and what is really happening, definitely give this a read (WARNING: Some algebra ahead...)!

Using Databricks Notebooks to run an ETL process

Here at endjin we've done a lot of work around data analysis and ETL. As part of this we have done some work with Databricks Notebooks on Microsoft Azure. Notebooks can be used for complex and powerful data analysis using Spark. Spark is a "unified analytics engine for big data and machine learning". It allows you to run data analysis workloads, and can be accessed via many APIs. This means that you can build up data processes and models using a language you feel comfortable with. They can also be run as an activity in a ADF pipeline, and combined with Mapping Data Flows to build up a complex ETL process which can be run via ADF.

ML.NET, Azure Functions and the 4th Industrial Revolution

There is a lot of hype around AI & ML. Here's an example of using ML.NET & Azure Functions to deliver a series of micro-optimisations, to automate a series of 1 second tasks. When applied to business processes, this is what the 4th Industrial Revolution could look like.

A conversation about .NET, The Cloud, Data & AI, teaching software engineers and joining endjin with Ian Griffiths

When he joined endjin, Technical Fellow Ian sat down with founder Howard for a Q&A session. This was originally published on LinkedIn in 5 parts, but is republished here, in full. Ian talks about his path into computing, some highlights of his career, the evolution of the .NET ecosystem, AI, and the software engineering life.

Using Python inside SQL Server

Do you have a bunch of data in SQL Server that you're using ODBC/JDBC to pull data to work with in Python? Using SQL Server's Python integration, you can connect to a SQL Server instance within your preferred IDE and perform the computations on the SQL Server Machine. No more clunky data transferring. Operationalizing a Python model/script is as easy as calling a stored procedure. Any application that can speak to SQL Server can invoke the Python code and retrieve the results. Easy! This blog will provide a few, simple examples which make use of this capability to carry out some simple Python commands, so you can get up and running as quickly as possible.

Snap Back to Reality – Month 2 & 3 of my Apprenticeship

Learn what types of things an apprentice gets up to at endjin a few months after joining. You could be learning about Neural Networks: algorithms which mimic the way biological systems process information. You could be attending Microsoft's Future Decoded conference, learning about Bots, CosmosDB, IoT and much more. Hopefully, you wouldn't be in hospital after a ruptured appendix!

My first month as an apprentice at endjin

Structured apprenticeships provide a great way to build skills whilst getting real-life experience. Endjin's apprenticeship scheme has been refined over years, with an optimal mixture of training, project work, and exposure to commercial processes - a scheme which is designed to build strong foundations for a well-rounded Software Engineering consultant. This post explains the transition from university to an apprenticeship at endjin, including the types of work an apprentice could end up doing, and some examples of real-life learnings from a real-life apprentice.

2 Day Microsoft Bot Framework Hackathon with Watchfinder

We ran a two day hackathon with Watchfinder and Microsoft to build a conversational experience to automate the 'sell your watch experience'.

Welcome to an internship at endjin!

A career in software engineering doesn't need to start with a Computer Science degree. The underlying traits of problem solving, a willingness to learn and the ability to collaborate well can be built in any field. Internships provide a great way to get your foot-in-the-door in the professional world, and arm you with some real-life experience for future endeavours. This post describes an internship at endjin, including the type of work you could be asked to do and what you could learn.

AWS vs Azure vs Google Cloud Platform - Analytics & Big Data

Automating R Unit Tests With Azure DevOps

Many organisations are starting to adopt the R Programming Language for their data science and financial modelling scenarios. But just because the language is being used for modelling, doesn't mean you should write unit tests that can be exercised as part of your CI/CD pipeline. In this blog post Jess Panni demonstrates how you can run R unit tests inside Azure DevOps.

Using Postman to load test an Azure Machine Learning web service

Azure Machine Learning Studio is a fantastic service for experimentation, but you can also easily productionize your machine learning models. In this post we show how to create an Azure ML Studio web service and test it using Postman.

Automated R Deployments in Azure

Machine Learning - the process is the science

What do machine learning and data science actually mean? This post digs into the detail behind the endjin approach to structured experimentation, arguing that the "science" is really all about following the process, allowing you to iterate to insights quickly when there are no guarantees of success.

Embracing Disruption - Financial Services and the Microsoft Cloud

We have produced an insightful booklet called "Embracing Disruption - Financial Services and the Microsoft Cloud" which examines the challenges and opportunities for the Financial Service Industry in the UK, through the lens of Microsoft Azure, Security, Privacy & Data Sovereignty, Data Ingestion, Transformation & Enrichment, Big Compute, Big Data, Insights & Visualisation, Infrastructure, Ops & Support, and the API Economy.

Machine Learning - mad science or a pragmatic process?

This post looks at what machine learning really is (and isn't), dispelling some of the myths and hype that have emerged as the interest in data science, predictive analytics and machine learning has grown. Without any hard guarantees of success, it argues that machine learning as a discipline is simply trial and error at scale – proving or disproving statistical scenarios through structured experimentation.