AWS vs Azure vs Google Cloud Platform - Networking

Choosing the right cloud platform provider can be a daunting task. Take the big three, AWS, Azure, and Google Cloud Platform: each offers a huge number of products and services, but understanding how they enable your specific needs is not easy. Since most organisations plan to migrate existing applications, it is important to understand how these systems will operate in the cloud. Through our work helping customers move to the cloud, we have compared all three providers' offerings in relation to three typical migration scenarios:

- Lift and shift - the cloud service can support running legacy systems with minimal change

- Consume PaaS services - the cloud offering is a managed service that can be consumed by existing solutions with minimal architectural change

- Re-architect for cloud - the cloud technology is typically used in solution architectures that have been optimised for cloud

Choosing the right strategy will depend on the nature of the applications being migrated, the business landscape and internal constraints.

In this series, we're comparing cloud services from AWS, Azure and Google Cloud Platform. A full breakdown and comparison of cloud providers and their services is available in this handy poster.

We have grouped all services into 9 categories:

- Compute

- Storage and Content Delivery

- Database

- Analytics & Big Data

- Internet of Things

- Mobile Services

- Networking

- Security & Identity

- Management & Monitoring

- Hybrid

In this post we are looking at...

Networking

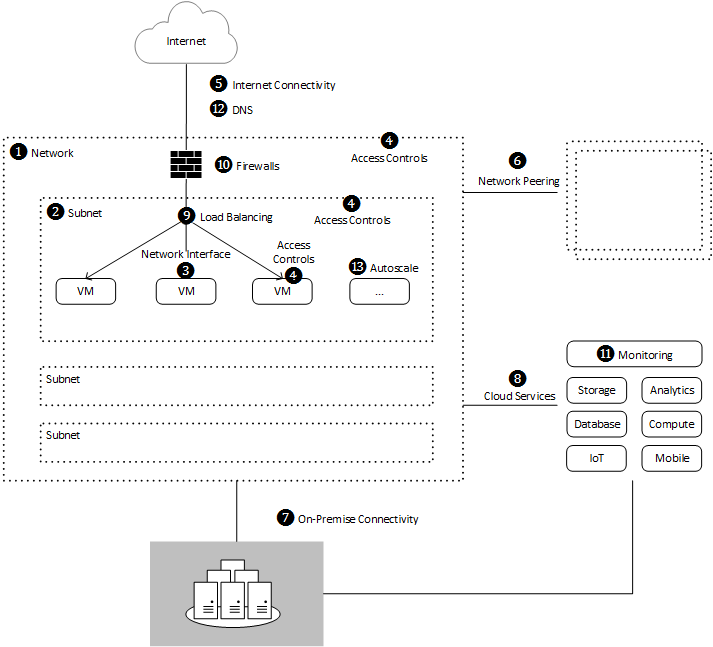

On-premise applications usually run in locked-down environments that isolate servers, ensure availability, and provide the necessary barriers against unauthorised access or attack. For these solutions to run in the cloud, similar controls are necessary. While the cloud provides opportunities to adopt managed services, it also brings additional challenges, such as providing secure and performant connectivity to on-premise networks and native cloud services. A traditional application running in the cloud may look like the one shown in the diagram above.

Let's see how our three cloud providers compare...

AWS

1. Networks

Virtual Private Cloud (VPC) is the marketing term given to the set of core networking services in AWS. A VPC represents a single network with a dedicated IP range containing EC2 instances and other network resources. By default, AWS allows up to 5 VPCs per region, although this limit can be increased on request.

Low-latency networking of up to 10 Gbps between instances can be achieved by launching them in the same Placement Group. This ensures that the instances run in close physical proximity for maximum performance, but at the expense of availability should the underlying physical hardware fail.

2. Subnets

Subnets may be configured to group related EC2 instances within a VPC. Traffic between instances and subnets is governed by route tables, which define the set of rules determining the flow of information between components within the VPC. Up to 200 subnets can be configured per VPC by default; this limit can also be increased on request.
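As a rough illustration of subnet planning, the sketch below carves a VPC address range into equally sized subnets using Python's standard `ipaddress` module. The `10.0.0.0/16` range and the helper names `plan_subnets` and `usable_addresses` are hypothetical; the one AWS-specific detail is that AWS reserves five addresses in every subnet (the first four and the last), which reduces the number of addresses available to instances.

```python
import ipaddress
from itertools import islice

# Hypothetical VPC address range, chosen for illustration only.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")

# AWS reserves the first four addresses and the last address in every subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc, prefix_len, count):
    """Carve `count` equally sized /prefix_len subnets out of the VPC range."""
    return list(islice(vpc.subnets(new_prefix=prefix_len), count))


def usable_addresses(subnet):
    """Addresses left for instances once AWS's reserved addresses are removed."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


for subnet in plan_subnets(VPC_CIDR, 24, 3):
    print(subnet, usable_addresses(subnet))
# 10.0.0.0/24 251
# 10.0.1.0/24 251
# 10.0.2.0/24 251
```

Sizing subnets up front matters because a subnet's range cannot simply be resized later, so leaving unallocated space in the VPC range keeps room for growth.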

3. Network Interface

By default, each instance in a VPC has a single Elastic Network Interface (ENI) providing a private IP and an optional public IP address. Additional ENIs can be added, for example, to provide separate SSH access from a private subnet. The number of Elastic Network Interfaces and IP addresses is governed by the instance type. ENIs other than an instance's primary interface can be detached and attached to different instances at any time, so IP addresses need not be tied to a specific instance.

4. Access Controls

Route tables define the rules which govern whether traffic can flow between specific subnets and other VPC resources. If a matching route is not explicitly specified, traffic will not be permitted between the source and destination.
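The route lookup described above can be sketched as a longest-prefix match: of all routes whose destination range contains the target address, the most specific one wins, and if none match the traffic has nowhere to go. The two route tables and the `igw-example` target ID below are hypothetical; a real VPC route table also supports other target types that are not modelled here.

```python
import ipaddress

# Hypothetical route tables: (destination CIDR, target) pairs.
PUBLIC_ROUTES = [
    (ipaddress.ip_network("10.0.0.0/16"), "local"),
    (ipaddress.ip_network("0.0.0.0/0"), "igw-example"),  # hypothetical gateway ID
]
PRIVATE_ROUTES = [
    (ipaddress.ip_network("10.0.0.0/16"), "local"),
]


def next_hop(route_table, destination):
    """Return the target of the most specific route containing `destination`,
    or None when no route matches and the traffic cannot be delivered."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, target) for net, target in route_table if addr in net]
    if not matches:
        return None
    _, target = max(matches, key=lambda match: match[0].prefixlen)
    return target


print(next_hop(PUBLIC_ROUTES, "10.0.2.15"))  # local
print(next_hop(PUBLIC_ROUTES, "8.8.8.8"))    # igw-example
print(next_hop(PRIVATE_ROUTES, "8.8.8.8"))   # None
```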

Network ACLs (Access Control Lists) control inbound and outbound traffic for a specific subnet. Assuming a route has been defined, traffic must then satisfy the collection of rules (protocols and ports) defined in the subnet's ACL.
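Network ACL rules are numbered and evaluated in ascending order, and the first rule that matches decides whether traffic is allowed or denied; anything that matches no numbered rule falls through to a final implicit deny. The minimal sketch below models only inbound rules with a protocol, port range and source CIDR. The rules themselves and the `AclRule`/`nacl_allows` names are hypothetical. Note also that network ACLs are stateless, so a real deployment needs matching outbound rules for return traffic, which this sketch does not model.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AclRule:
    number: int      # rules are evaluated in ascending order of this number
    protocol: str    # "tcp", "udp" or "all"
    port_from: int
    port_to: int
    cidr: str        # source range
    allow: bool


def nacl_allows(rules, protocol, port, source_ip):
    """Return the decision of the first matching rule; deny if none match."""
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.protocol not in ("all", protocol):
            continue
        if not rule.port_from <= port <= rule.port_to:
            continue
        if src not in ipaddress.ip_network(rule.cidr):
            continue
        return rule.allow  # first match wins; later rules are not consulted
    return False           # implicit final rule denies everything else


NACL = [
    AclRule(100, "tcp", 443, 443, "0.0.0.0/0", True),
    AclRule(90, "tcp", 0, 65535, "203.0.113.0/24", False),  # hypothetical blocked range
]

print(nacl_allows(NACL, "tcp", 443, "198.51.100.7"))  # True
print(nacl_allows(NACL, "tcp", 443, "203.0.113.5"))   # False: rule 90 is evaluated first
print(nacl_allows(NACL, "tcp", 22, "198.51.100.7"))   # False: only the implicit deny matches
```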

Security Groups provide an additional layer of security at the instance level. Security Groups are assigned to ENIs and define the traffic permitted to reach the target instance. Each ENI can have up to 5 Security Groups.
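Security Groups differ from network ACLs in that they contain only allow rules: traffic reaches the instance if any rule in any of the attached groups matches, and is dropped otherwise, with no rule ordering involved. The sketch below checks inbound traffic against the union of several groups. The group contents, the management subnet and the `inbound_allowed` helper are hypothetical, and the sketch does not model the fact that Security Groups are stateful (responses to allowed inbound traffic are automatically permitted out).

```python
import ipaddress

# Hypothetical groups: each is a list of inbound allow rules (protocol, port, source CIDR).
WEB_SG = [("tcp", 443, "0.0.0.0/0")]
ADMIN_SG = [("tcp", 22, "10.0.1.0/24")]  # hypothetical management subnet


def inbound_allowed(groups, protocol, port, source_ip):
    """Allowed if any rule in any attached group matches; there are no deny rules."""
    src = ipaddress.ip_address(source_ip)
    return any(
        proto == protocol and rule_port == port and src in ipaddress.ip_network(cidr)
        for group in groups
        for proto, rule_port, cidr in group
    )


attached = [WEB_SG, ADMIN_SG]
print(inbound_allowed(attached, "tcp", 443, "198.51.100.7"))  # True
print(inbound_allowed(attached, "tcp", 22, "10.0.1.20"))      # True
print(inbound_allowed(attached, "tcp", 22, "198.51.100.7"))   # False
```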

5. Internet Connectivity

To allow instances with public IP addresses to connect to the internet, an Internet Gateway must be attached to the VPC and a route configured from the public-facing subnet to the Internet Gateway. The default VPC in each region comes pre-configured with an Internet Gateway and public-facing default subnets. The Internet Gateway is fully managed and scales transparently according to demand.

NAT Gateway allows instances in a private subnet to connect to the internet without allowing connections to be initiated from the internet. NAT Gateway is charged per gateway per hour and per gigabyte of data processed.

6. Network Peering

VPC peering allows instances in one VPC to communicate with instances in another VPC as if they were on the same network. The VPCs can belong to the same or different AWS accounts. Peering is configured by the owner of one VPC requesting access to a second VPC. Once accepted, routes can be configured to allow traffic to flow to the IP range of the target VPC.
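One practical constraint worth checking before requesting a peering connection is that AWS does not allow peering between VPCs whose CIDR blocks overlap, since routes to the peer's range would be ambiguous. The sketch below performs that check and builds the route entry that would be added once the peering is accepted. The helper names and the `pcx-example` peering ID are hypothetical; in practice a matching route is needed in the route tables on both sides.

```python
import ipaddress


def can_peer(requester_cidr, accepter_cidr):
    """Peering is only possible between VPCs whose CIDR blocks do not overlap."""
    return not ipaddress.ip_network(requester_cidr).overlaps(
        ipaddress.ip_network(accepter_cidr)
    )


def peering_route(peer_cidr, peering_id):
    """Route entry (destination, target) sending the peer's range over the peering."""
    return (ipaddress.ip_network(peer_cidr), peering_id)


print(can_peer("10.0.0.0/16", "10.1.0.0/16"))    # True
print(can_peer("10.0.0.0/16", "10.0.128.0/17"))  # False: the ranges overlap
print(peering_route("10.1.0.0/16", "pcx-example"))
```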

7. On-Premise Connectivity

AWS enables IPSec VPNs by using Virtual Private Gateway. Virtual Private Gateway supports connections to a range of hardware VPN appliances configured on the target on-premise network. It provides both static routing and dynamic routing for devices supporting Border Gateway Protocol (BGP). Virtual Private Gateway is charged per VPN-connection per hour.

Unlike Azure, Virtual Private Gateway only supports VPN connections between sites. Establishing a VPN from a device (point-to-site) to a network running in AWS requires running a third party VPN solution such as OpenVPN on one or more EC2 instances.

VPN CloudHub enables external networks to connect to each other via AWS using a hub-and-spoke model. This can be useful when multiple external office sites need to connect.

For dedicated high-speed connections, AWS has Direct Connect. Direct Connect provides 1 to 10 Gbps connections directly from an organisation's internal network to one of the AWS Direct Connect locations around the world. Organisations can then directly access AWS services in the region associated with the location. If you connect into the US, it is possible to access services across all US regions from the same connection. Unlike Azure, there is no option to access services hosted in regions outside the US from a single connection. Connections are configured via Virtual Interfaces, which connect to a VPC or a public endpoint. By default, each connection can support up to 50 Virtual Interfaces, and up to 10 connections can be made per region, although these limits can be raised.

Slower connection speeds are available through the AWS Direct Connect Partner network.

Pricing is based on the number of ports (connections) per hour and the speed of the connection plus data egress charges.

8. Cloud Services

It is possible to connect to S3 from instances running in a private subnet without the need for a NAT, internet gateway or virtual private gateway by using VPC Endpoints. VPC Endpoints are routable resources that provide access to AWS services that are not part of the VPC. Endpoint Policies, written in IAM policy syntax, can be attached to endpoints to restrict access to the service. At the moment only S3 is supported, but there are plans to allow access to other services in the future. There is no additional charge for using VPC endpoints.

9. Load Balancing

Elastic Load Balancing can be used to direct traffic across a number of EC2 instances to create fault tolerant solutions. The service is fully managed and will automatically scale to meet demand. There are two types of load balancers available. Classic Load Balancer offers general purpose Layer 4 (TCP/IP) and Layer 7 (HTTP) routing. Application Load Balancer is designed specifically to support modern application workloads such as containerised applications, web sockets and HTTP/2 traffic. Both load balancers have built-in health monitoring, operational management through CloudWatch, logging, SSL termination and sticky sessions. Pricing is based on the number of deployed load balancers per hour plus a charge for the amount of data processed.

Where more control is required, a range of third party load balancing appliances such as Kemp LoadMaster and Barracuda Load Balancer are available from the AWS Marketplace.

DNS-based load balancing is also provided through Route 53. Route 53 provides a range of routing and load balancing services, such as directing weighted portions of traffic to different endpoints, choosing endpoints based on latency, routing traffic based on the geographical location of the user, and failing over to an alternate endpoint or region if the intended endpoint becomes unavailable. Route 53 can also use regular health checks to send alerts and notifications via CloudWatch.
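Weighted routing sends each endpoint a share of responses proportional to its weight divided by the sum of all weights. The sketch below simulates that behaviour for a blue/green split; the record names and the 90/10 weights are hypothetical, and it models only the proportional selection, not DNS caching or TTLs, which in practice make the observed split less exact.

```python
import random
from collections import Counter

# Hypothetical weighted record set: endpoint -> weight.
RECORDS = {"blue.example.com": 90, "green.example.com": 10}


def pick_endpoint(records, rng=random):
    """Return an endpoint with probability weight / sum(weights)."""
    endpoints = list(records)
    weights = [records[e] for e in endpoints]
    return rng.choices(endpoints, weights=weights, k=1)[0]


rng = random.Random(42)  # seeded so the simulation is repeatable
counts = Counter(pick_endpoint(RECORDS, rng) for _ in range(10_000))
print(counts)  # roughly 9,000 blue and 1,000 green
```

Shifting the weights gradually (for example 90/10, then 50/50, then 0/100) is a common way to move traffic to a new deployment.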

10. Firewalls

AWS WAF is a managed web application firewall that protects applications from common web exploits, attack patterns or unwanted traffic. Of the three providers, AWS is the only one to offer a fully managed WAF. Rules can be set up to block well-known threats such as SQL injection or cross-site scripting, as well as custom rules based on the request payload. In addition to blocking requests, traffic can be monitored according to the rules defined. Pricing is based on the number of web ACLs (access control lists) defined and the number of rules.

In addition to the managed AWS WAF, a number of web application firewalls are available through the AWS Marketplace. These services are pre-configured EC2 instances from well known vendors which often come with pay-as-you-go licences.

11. Monitoring

Network traffic flows can be monitored using VPC Flow Logs. Once enabled for a VPC, subnet or network interface, flow records will be sent to CloudWatch Logs for monitoring.
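Flow log records in the default format are space-separated lines with fourteen fields (version, account ID, interface ID, source and destination address, source and destination port, protocol number, packets, bytes, start and end time, action and log status). The sketch below parses such lines into dictionaries and filters out rejected traffic, which is a common first step when investigating a misconfigured ACL. The sample records are made up for illustration, and a "-" is treated as an unpopulated field, as happens in NODATA records.

```python
FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]
INT_FIELDS = {"srcport", "dstport", "protocol", "packets", "bytes", "start", "end"}


def parse_flow_record(line):
    """Parse one default-format flow log line into a dict."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    record = {}
    for name, value in zip(FIELDS, values):
        if value == "-":
            record[name] = None          # field not populated (e.g. NODATA records)
        elif name in INT_FIELDS:
            record[name] = int(value)
        else:
            record[name] = value
    return record


def rejected(records):
    """Keep only traffic that was blocked by a security group or network ACL."""
    return [r for r in records if r["action"] == "REJECT"]


# Made-up sample records for illustration (protocol 6 is TCP).
SAMPLE = [
    "2 123456789012 eni-0example 10.0.1.5 10.0.2.7 49152 443 6 10 840 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0example 203.0.113.9 10.0.2.7 50000 22 6 1 40 1700000000 1700000060 REJECT OK",
    "2 123456789012 eni-0example - - - - - - - 1700000000 1700000060 - NODATA",
]
records = [parse_flow_record(line) for line in SAMPLE]
print([r["srcaddr"] for r in rejected(records)])  # ['203.0.113.9']
```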

12. DNS

Route 53 is a DNS service that resolves user requests and ensures they are directed to the correct infrastructure. Route 53 is an authoritative DNS service, meaning it provides the definitive answers for mapping the domain names it hosts to IP addresses. As discussed above, Route 53 also provides a range of configurable routing strategies.

Route 53 Traffic Flow is a graphical user interface for defining and visualising complex routing policies. It is also possible to register domain names directly from Route 53 either through the console or via an API.

Pricing is based on the number of hosted zones (domain names), with a discount after the first 25 hosted zones. An additional charge applies per million queries per month, according to the routing policy being used (geo routing being the most expensive). If you are using Traffic Flow, there are additional charges for each policy defined. The first 50 health checks are free, after which additional charges apply.

13. Autoscale

It is possible to autoscale EC2 instances within a VPC according to a defined set of performance metric thresholds. There are no additional fees for autoscaling other than the underlying EC2 charges.
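Threshold-based scaling boils down to a simple decision: add capacity when a metric crosses an upper threshold, remove it when the metric drops below a lower one, and never leave the configured minimum and maximum. The sketch below shows only that decision logic. The 70% and 30% CPU thresholds, the 2 to 10 instance bounds and the `desired_capacity` helper are hypothetical; in AWS the thresholds are configured as CloudWatch alarms attached to scaling policies rather than written as code.

```python
def desired_capacity(current, cpu_percent, scale_out_at=70.0, scale_in_at=30.0,
                     minimum=2, maximum=10):
    """Add one instance above the upper CPU threshold, remove one below the
    lower threshold, and always stay within [minimum, maximum]."""
    if cpu_percent > scale_out_at:
        current += 1
    elif cpu_percent < scale_in_at:
        current -= 1
    return max(minimum, min(maximum, current))


print(desired_capacity(4, 85))   # 5: above the scale-out threshold
print(desired_capacity(4, 50))   # 4: between thresholds, no change
print(desired_capacity(2, 10))   # 2: already at the minimum
```

Keeping a gap between the two thresholds avoids the group repeatedly adding and removing an instance when the metric hovers around a single value.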

Azure

Azure provides two different models for deploying network services - Resource Manager and Classic. Since Resource Manager is recommended for most scenarios it will be the focus for this post.

1. Networks

Azure Virtual Networks provide services for building networks within Azure. This includes the ability to create virtual networks (VNets) in which to host Virtual Machines, virtual appliances and PaaS offerings such as Cloud Services and App Service Environments.

2. Subnets

As you would expect, network resources can be grouped by subnet for organisation and security. Each subnet can be assigned a route table to define outgoing traffic flow, for example to route traffic through a virtual appliance.

3. Network Interface

Each virtual machine can be assigned one or more network interfaces (NICs), up to a limit that depends on the VM size. Each network interface is attached to a subnet, and when the instance starts up a private IP address is dynamically assigned by Azure DHCP. It is possible to assign a static private IP address and an optional public IP address to a NIC.

4. Access Controls

Traffic can be permitted or denied at the NIC or subnet level via Network Security Groups (NSGs). An NSG contains a set of prioritised ACL rules that explicitly allow or deny access. Each subnet, NIC or role instance can have at most one NSG. Rules are defined according to the direction of traffic, protocol, source port, destination port, and source and destination IP address prefixes. Each region per subscription can have up to 400 NSGs, and each NSG can have up to 500 rules.
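NSG rules are processed in priority order, where a lower number means a higher priority, and processing stops at the first rule that matches. The sketch below evaluates a handful of inbound rules that way. The rules, the admin subnet and the helper names are hypothetical; the final rule mirrors Azure's default `DenyAllInBound` rule at priority 65500, and real NSGs also match on source port and destination prefix, which are omitted here for brevity.

```python
import ipaddress
from typing import NamedTuple


class NsgRule(NamedTuple):
    priority: int        # lower number = evaluated first
    direction: str       # "Inbound" or "Outbound"
    protocol: str        # "Tcp", "Udp" or "*"
    dest_port: str       # a single port as a string, or "*"
    source_prefix: str   # CIDR prefix, or "*"
    access: str          # "Allow" or "Deny"


RULES = [
    NsgRule(100, "Inbound", "Tcp", "443", "*", "Allow"),
    NsgRule(200, "Inbound", "Tcp", "3389", "10.1.0.0/24", "Allow"),  # hypothetical admin subnet
    NsgRule(65500, "Inbound", "*", "*", "*", "Deny"),  # mirrors the default DenyAllInBound
]


def matches(rule, direction, protocol, port, source_ip):
    if rule.direction != direction or rule.protocol not in ("*", protocol):
        return False
    if rule.dest_port not in ("*", str(port)):
        return False
    if rule.source_prefix == "*":
        return True
    return ipaddress.ip_address(source_ip) in ipaddress.ip_network(rule.source_prefix)


def decide(rules, direction, protocol, port, source_ip):
    """Return the access of the highest-priority matching rule."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if matches(rule, direction, protocol, port, source_ip):
            return rule.access  # processing stops at the first match
    return "Deny"


print(decide(RULES, "Inbound", "Tcp", 443, "203.0.113.9"))   # Allow
print(decide(RULES, "Inbound", "Tcp", 3389, "203.0.113.9"))  # Deny
print(decide(RULES, "Inbound", "Tcp", 3389, "10.1.0.5"))     # Allow
```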

5. Internet Connectivity

Azure routes traffic to and from the internet via an infrastructure level gateway. Routing rules are set up to control whether traffic is permitted to flow to this gateway. Unlike AWS, there is no option to explicitly omit the gateway.

Default system routes permit virtual machines with public IP addresses to communicate over the public internet; these routes can be overridden as required. Additional routes may be added to redirect outbound packets through a network appliance or to drop packets completely. NICs with only private IP addresses can be reached from the internet using an Azure Load Balancer. In this configuration, the VM is accessible via the public IP address assigned to the load balancer, which then performs the appropriate network address translation (NAT). NAT rules on the load balancer specify which protocols and ports are permitted. For this reason, you may wish to use a load balancer even when targeting a single VM.

6. Network Peering

Microsoft have recently announced support for network peering between VNETs. Peering can be configured between VNets in the same region providing low latency communication between VMs on different VNETs. Network peering can be configured across subscriptions and can also be used to connect resources in a Classic VNET with the newer ARM based VNETs.

An alternative to network peering is to connect two VNETs using VPN Gateway. VPN Gateway allows VNET to VNET connections in the same or across multiple regions.

7. On-Premise Connectivity

Azure allows connectivity to on-premise networks through VPN Gateway. Site-to-site VPNs can be configured over the public internet or via a dedicated private connection using ExpressRoute. VPN Gateway also supports multiple sites connecting to the same Azure VNET as well as point-to-site VPNs. Both static routes and dynamic BGP routes are supported.

Pricing for VPN Gateway is based on the chosen SKU and the amount of outbound data. The highest SKU supports up to 200 Mbps over an internet connection, 2000 Mbps over ExpressRoute and 30 IPsec tunnels.

ExpressRoute provides connections of up to 10 Gbps to Azure services over a dedicated fibre connection. An ExpressRoute connection connects an on-premise network to the Microsoft cloud, including all datacenter locations within the same continent as the peering location. Traffic between datacenters is carried over the Microsoft cloud network. ExpressRoute can also be used to connect to Office 365.

ExpressRoute Premium increases the number of VNET connections and route configurations available; it also provides global connectivity to all Azure regions from a single peering location.

Microsoft offer metered plans which are based on the connection speed and the amount of outbound data transferred. Unlimited plans are also available.

8. Cloud Services

It is currently possible to add Cloud Services and App Service Environments to VNETs, allowing PaaS applications to be used in non-internet-addressable configurations. It is also possible to grant standard App Services running outside the VNET access to resources within a VNET.

An alternative approach unique to Microsoft is Azure Stack. Azure Stack allows organisations to use Azure services running in private data centers. While not strictly 'cloud' it does provide an interesting and unique hybrid alternative for organisations who cannot move workloads to public cloud infrastructure but who still want the agility of PaaS services. Azure Stack is currently in technical preview.

9. Load Balancing

Azure Load Balancer provides layer 4 load balancing, NAT and port forwarding across one or more VMs within a VNET. Load Balancer can be configured as an internet facing load balancer with a public IP (VIP) or as an internal (private) load balancer. The load balancer ensures traffic is sent only to healthy nodes, it does this by periodically probing an endpoint on the VM. It is possible to define custom probes where more control is required. Log Analytics can be used for audit and alerts across the load balanced set. Load Balancer is a free service but cannot be used with A-series VMs.
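By default, Azure Load Balancer distributes flows using a hash of the five-tuple (source IP, source port, destination IP, destination port, protocol), so packets from the same connection keep reaching the same VM, and only backends whose health probe is passing are eligible. The sketch below imitates that behaviour. Azure does not document its internal hash function, so SHA-256 is used here purely as a stand-in, and the VM names and `pick_backend` helper are hypothetical.

```python
import hashlib


def pick_backend(five_tuple, backends, healthy):
    """Hash (src IP, src port, dst IP, dst port, protocol) onto the backends
    whose health probe is currently passing."""
    candidates = [b for b in backends if b in healthy]
    if not candidates:
        raise RuntimeError("no healthy backends")
    key = "|".join(str(part) for part in five_tuple).encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return candidates[digest % len(candidates)]


backends = ["vm-1", "vm-2", "vm-3"]          # hypothetical backend pool
healthy = {"vm-1", "vm-3"}                   # vm-2 is failing its probe
flow = ("198.51.100.7", 50123, "203.0.113.10", 443, "TCP")
print(pick_backend(flow, backends, healthy))  # vm-1 or vm-3, never vm-2
```

Because the hash covers the source port, a client opening several connections may be spread across different VMs; Azure's source IP affinity modes change the tuple that is hashed to keep a client on one VM.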

Application Gateway is a Layer 7 (HTTP/HTTPS) load balancer. It can be used as an internet-facing or private load balancer providing round-robin, URL-based routing or cookie affinity traffic distribution. Application Gateway uses HTTP probes to monitor health and can provide SSL termination. Pricing is based on the time that Application Gateway is running and the amount of data transferred.

Traffic Manager is a DNS based traffic routing solution. It provides a number of distribution policies including weighted round robin, automatic fail-over to healthy endpoints, and routing traffic to the nearest location. Traffic Manager can route traffic to services in any region as well as non-Azure endpoints. Health checking is achieved by periodically polling an HTTP endpoint. Pricing is based on the number of DNS queries received, with a discount for services receiving more than 1 billion monthly queries. There is an additional charge for each health check endpoint.
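Automatic fail-over in Traffic Manager corresponds to its priority routing method: each endpoint is given a priority value, where a lower value is preferred, and DNS answers point at the most preferred endpoint that is currently passing its health check. The sketch below shows that selection; the endpoint names are hypothetical and the health state is passed in rather than probed.

```python
def priority_route(endpoints, healthy):
    """endpoints: list of (priority, name) pairs; a lower priority value is
    preferred. Return the most preferred healthy endpoint, or None."""
    for _, name in sorted(endpoints):
        if name in healthy:
            return name
    return None


ENDPOINTS = [
    (1, "app-westeurope.example.com"),   # hypothetical primary
    (2, "app-northeurope.example.com"),  # hypothetical secondary
]

print(priority_route(ENDPOINTS, {"app-westeurope.example.com", "app-northeurope.example.com"}))
# app-westeurope.example.com
print(priority_route(ENDPOINTS, {"app-northeurope.example.com"}))
# app-northeurope.example.com (primary has failed its health check)
```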

For other load balancing options, a range of third party load balancing appliances are available from the Azure Marketplace.

10. Firewall Appliances

Azure's infrastructure provides a level of built-in DDoS and IDS protection for all Azure inbound traffic. Network Security Groups provide core firewall controls at the individual VNET, subnet and NIC level. Where a more advanced firewall solution is required, the Azure Marketplace offers a range of firewall options. Unlike AWS, Azure does not provide a managed Web Application Firewall offering.

11. Monitoring

Azure Log Analytics provides monitoring insights across Azure Load Balancer, Application Gateway and Network Security Group events. By enabling the VM Log Analytics Extension, performance metrics can be surfaced in Log Analytics and Visual Studio.

12. DNS

Azure DNS is an authoritative DNS service that allows users to manage their public DNS names. Being an Azure service it allows network administrators to use their organisation identity to manage DNS while benefiting from all the usual access controls, auditing and billing features. Pricing is based on the number of DNS zones hosted in Azure and the number of DNS queries received.

13. Autoscale

Virtual machine scale sets allow VM instances to be automatically added to or removed from a VNET based on a set of rules. Rules can be defined on a range of criteria, including performance metric thresholds, day/time and message queue size. When using Azure Load Balancer, new instances will be automatically registered with or removed from the load balanced set.

Google Cloud Platform

1. Networks

Cloud Virtual Network is Google's answer to networking in the cloud. Cloud Virtual Networks can contain up to 7000 virtual machine instances. Unlike AWS and Azure, networks can encompass resources (subnets) deployed across multiple regions, which reduces the need for complex VPN and network peering configuration.

2. Subnets

Subnets group related resources, however, unlike AWS and Azure, Google do not constrain the private IP address ranges of subnets to the address space of the parent network. It is therefore possible to have one subnet with a range of 10.240.0.0/16 and another with 192.168.0.0/16 on the same network. While the network can span multiple regions, individual subnets must belong to a single region. Default network routes allow connectivity to/from the internet to each subnet and between subnets. Additional routes can be added to override these defaults where required.
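To make the difference concrete, the sketch below describes one hypothetical Google network with two subnets in different regions that use unrelated address ranges, exactly like the 10.240.0.0/16 and 192.168.0.0/16 example above. The only check it performs is that subnet ranges within the same network do not overlap, which still applies even though they need not share a parent range. The subnet names and the `validate` and `regions` helpers are hypothetical; `europe-west1` and `us-central1` are real GCP region names.

```python
import ipaddress
from itertools import combinations

# Hypothetical subnets in one network: name -> (region, CIDR).
SUBNETS = {
    "app-europe": ("europe-west1", "10.240.0.0/16"),
    "db-us": ("us-central1", "192.168.0.0/16"),
}


def validate(subnets):
    """Return a list of overlapping subnet pairs within the network."""
    problems = []
    for (a, (_, cidr_a)), (b, (_, cidr_b)) in combinations(subnets.items(), 2):
        if ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b)):
            problems.append(f"{a} overlaps {b}")
    return problems


def regions(subnets):
    """A single network can span regions; each subnet belongs to exactly one."""
    return sorted({region for region, _ in subnets.values()})


print(validate(SUBNETS))  # []
print(regions(SUBNETS))   # ['europe-west1', 'us-central1']
```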

3. Network Interface

Each virtual machine instance has a dynamic private IP address allocated according to the address range of its subnet. An optional public IP address can also be specified. Both AWS and Azure support multiple network interfaces allowing more IP addresses than Google currently supports. This could be a problem when planning to run virtual appliances that require multiple network interfaces. Like the other providers, static public IP addresses can be reserved and assigned to instances if required.

4. Access Controls

Each network comes with a firewall that can be configured with rules to control the traffic that is accepted by a resource or set of resources within the network. Each rule (ACL) defines permitted traffic according to source IP, destination IP, ports and protocol. It is also possible to tag specific resources and define rules against these tags.
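Tag-based rules let a single firewall rule apply to every instance carrying a given tag rather than to a list of IP addresses. The sketch below checks whether ingress traffic to an instance is allowed by any rule whose target tag the instance carries; traffic that matches no rule is denied, in line with GCP's implied deny-ingress behaviour. The rules, tags and the `ingress_allowed` helper are hypothetical.

```python
import ipaddress

# Hypothetical ingress rules applying to instances that carry `target_tag`.
RULES = [
    {"target_tag": "web", "protocol": "tcp", "ports": {80, 443}, "sources": ["0.0.0.0/0"]},
    {"target_tag": "db", "protocol": "tcp", "ports": {5432}, "sources": ["10.240.0.0/16"]},
]


def ingress_allowed(instance_tags, protocol, port, source_ip, rules=RULES):
    """Allowed if any rule targets one of the instance's tags and matches the
    protocol, port and source range; otherwise the implied deny applies."""
    src = ipaddress.ip_address(source_ip)
    return any(
        rule["target_tag"] in instance_tags
        and rule["protocol"] == protocol
        and port in rule["ports"]
        and any(src in ipaddress.ip_network(cidr) for cidr in rule["sources"])
        for rule in rules
    )


print(ingress_allowed({"web"}, "tcp", 443, "198.51.100.7"))   # True
print(ingress_allowed({"db"}, "tcp", 5432, "198.51.100.7"))   # False: outside 10.240.0.0/16
print(ingress_allowed({"db"}, "tcp", 5432, "10.240.3.4"))     # True
```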

5. Internet Connectivity

Like Azure, Google has a built-in internet gateway that can be specified from routing rules. Default system routes allow instances with public IP addresses to communicate over the internet. Additional routes may be added to redirect outbound packets through a network appliance if required. Protocol forwarding allows traffic intended for a public IP address to be sent to an instance with a private IP address. Protocol forwarding pricing is based on the number of rules configured and the amount of data processed.

6. Network Peering

Unlike Azure and AWS, Google Cloud Platform has no direct GCP-to-GCP network peering option. The only option to connect networks is to use Cloud VPN. However, Google's flexible approach to IP ranges and its cross-region networking support largely remove the need for network peering.

7. On-Premise Connectivity

Cloud VPN supports IPsec VPN connections between Cloud Virtual Networks and on-premise networks. On its own, Cloud VPN supports static routes only; however, by using Cloud Router it is possible to take advantage of dynamic (BGP) routing.

Pricing for VPNs is based on the number of tunnels per-hour plus the cost of a public static IP address, if required.

Cloud Interconnect provides a fast dedicated connection to GCP services available through a range of service providers to one or more of Google's edge locations.

Unique to Google is the option to directly peer to one of Google's edge locations. This is only an option for organisations who have the necessary infrastructure to support direct connections.

Organisations can also access Google G Suite (formerly Google Apps for Work) via Interconnect.

Egress data charges depend on whether data moves within the same region as the peering location or across regions, and service provider charges apply on top. For direct peering, no service provider is required, so customers pay only for data egress.

CDN Interconnect allows fast and reliable connectivity between GCP and selected CDN providers. This can be useful when populating an external CDN with large or frequent data from Google Cloud.

8. Cloud Services

Access to PaaS services such as App Engine, Cloud Storage or Cloud SQL is only available via standard public endpoints.

9. Load Balancing

Google Cloud Load Balancing provides traditional and HTTP load balancing:

Network load balancing relies on forwarding rules to route traffic from a public IP address to a target pool containing the instances to load balance. Any TCP/UDP traffic can be load balanced based on source and destination IP address, port and protocol, ensuring that traffic from the same connection reaches the same server. Health checks are associated with the target pool and determine whether traffic should be routed to a given instance or not. Pricing is based on the number of forwarding rules and the amount of data processed.

HTTP(S) Load Balancing provides global load balancing for modern web based applications. Traffic is routed to the nearest instance group to the calling user via an anycast IP address and SSL can be terminated at the load balancer. Distribution strategies include URL and content based routing.

Internal load balancing allows internal traffic to be distributed across a set of back-end instances without the need for a public IP address.
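The URL-based routing offered by HTTP(S) Load Balancing can be illustrated as mapping request paths onto backend services, with the most specific matching path taking precedence and a default service catching everything else. The sketch below uses simple prefix matching as a simplification; real GCP URL maps have their own path-rule syntax, and the paths and backend service names here are hypothetical.

```python
# Hypothetical path rules: path prefix -> backend service name.
PATH_RULES = {
    "/static/": "static-backend",
    "/api/": "api-backend",
    "/api/v2/": "api-v2-backend",
}
DEFAULT_SERVICE = "web-backend"


def route(path, rules=PATH_RULES, default=DEFAULT_SERVICE):
    """Send the request to the backend with the longest matching path prefix."""
    matches = [prefix for prefix in rules if path.startswith(prefix)]
    if not matches:
        return default
    return rules[max(matches, key=len)]


print(route("/api/v2/users"))   # api-v2-backend
print(route("/api/v1/orders"))  # api-backend
print(route("/index.html"))     # web-backend
```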

10. Firewalls

In addition to the built-in firewall access control lists, third party firewall appliances can be configured to intercept incoming and outgoing traffic. The number of firewall virtual appliances available on Launcher (GCP's Marketplace) is very limited when compared with Azure and AWS.

11. Monitoring

Google will automatically log and monitor networking events and audit changes to environments. Logs and monitoring are available through Stackdriver.

12. DNS

Like Route 53 and Azure DNS, Cloud DNS allows organisations to manage their DNS and associated records along with the rest of their cloud services. Pricing is based on the number of zones and the number of queries (per million).

13. Autoscale

Google supports autoscaling groups of compute instances based on various runtime performance statistics. Scaling can be triggered through metrics such as CPU utilisation, HTTP load balancing throughput, Stackdriver monitoring alerts or Cloud Pub/Sub queue metrics.
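Where autoscaling is driven by a target utilisation (for example, keeping average CPU at 60%), the group size is typically adjusted in proportion to how far the observed value is from the target. The sketch below implements that proportional rule with integer percentages to avoid floating-point rounding. It is an assumption that this captures the core of Google's behaviour; the real autoscaler adds stabilisation and other refinements, and the bounds and `recommended_size` helper are hypothetical.

```python
def recommended_size(current_instances, observed_pct, target_pct,
                     min_instances=1, max_instances=10):
    """Scale in proportion to observed / target utilisation, rounding up,
    and clamp to the configured bounds."""
    if not 0 < target_pct <= 100:
        raise ValueError("target_pct must be in (0, 100]")
    # Integer ceiling of current * observed / target.
    size = (current_instances * observed_pct + target_pct - 1) // target_pct
    return max(min_instances, min(max_instances, size))


print(recommended_size(4, 90, 60))    # 6: 4 instances at 90% need 6 to reach 60%
print(recommended_size(4, 20, 60))    # 2
print(recommended_size(10, 100, 50))  # 10: capped at max_instances
```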

Conclusion

In the past, AWS was generally considered the go-to cloud for organisations with IaaS workloads running in locked-down networking environments. However, this is no longer the case, as all three providers offer a similar and compelling set of capabilities. There are a few notable differences, such as AWS's managed WAF and Azure's PaaS networking support. There are of course subtleties between services that this article cannot do justice to, but what is now clear is that, for most organisations, networking is unlikely to be the differentiating factor when choosing a cloud provider.

Next up we will be looking at Security & Identity.