Streamline .NET Dependency Management with NuGet Meta Packages

This post discusses how we sought to resolve a particular issue with managing updates to NuGet dependencies in our testing tool-chain. The rest of the post will cover that journey in detail, but if you just want the TL;DR:

- A 'meta' NuGet package, also called a 'virtual' package, is one that only declares other package dependencies (rather than containing any assemblies or content of its own).

- Grouping NuGet packages that you frequently reference together (e.g. those supporting your testing tool-chain) can simplify setting up new projects, as well as upgrading those package versions in existing projects, for example with tools like Dependabot.

- When consuming dependencies in such an indirect way, there are some subtleties to the PackageReference configuration options that need handling to ensure that all the assets provided by the package are available to projects further up the dependency tree (see the NuGet PackageReference documentation).

Recently we've been migrating to GitHub's integrated version of Dependabot and taking the opportunity to use GitHub Actions to automate even more of our dependency management process - however, that will have to be a topic for another blog post!

We maintain 30+ open source projects that weave a web of inter-related dependencies, so having a process that can efficiently and reliably cascade these updates all the way up the dependency tree is very important for us. During this work we realised that updates to our testing tool-chain (SpecFlow and NUnit) could not be automated by Dependabot.

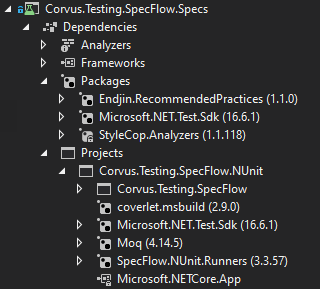

Below is a screenshot showing a typical SpecFlow specifications project in Visual Studio, illustrating how it directly references several packages:

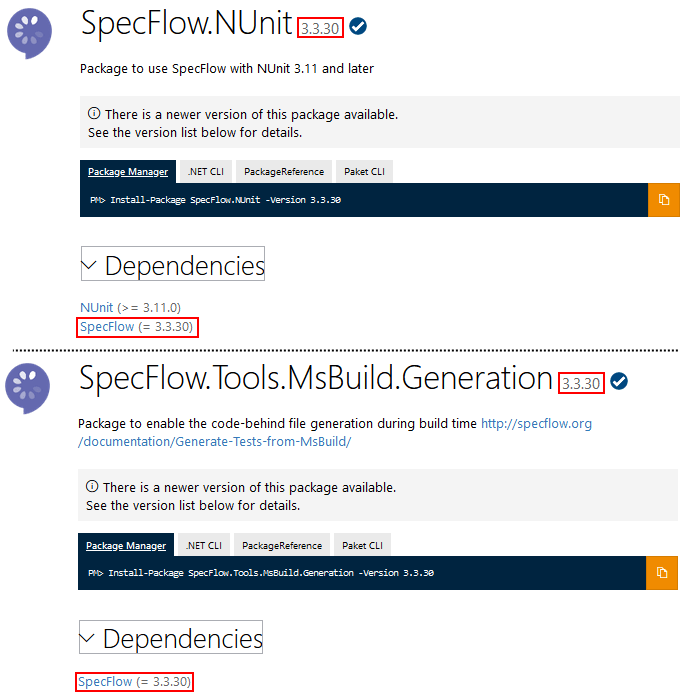

In addition to this meaning a few extra clicks when setting up a new project, two particular packages were preventing us from using Dependabot to bump their versions automatically (thus bringing any cascading update that was part of an automated process to a screeching halt!):

- SpecFlow.NUnit

- SpecFlow.Tools.MsBuild.Generation

These packages are individually referenced, but each is bound to a specific version of the SpecFlow package.

Dependabot would create a separate pull request (PR) for each package. However, in the branch created for each PR, our CI build would fail due to a SpecFlow version mismatch.

In the PR to bump SpecFlow.NUnit, the build would fail due to a SpecFlow mismatch with SpecFlow.Tools.MsBuild.Generation - whilst in the SpecFlow.Tools.MsBuild.Generation PR the build would fail due to the mismatch with SpecFlow.NUnit.

We needed a way to get those dependencies bumped at the same time (i.e. in the same PR).
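To make the conflict concrete, a specifications project of this kind might carry references along the lines of the sketch below. The version numbers are illustrative assumptions rather than values taken from our projects; the point is that both references need to move in lockstep, whereas Dependabot proposes each bump in its own PR.

```xml
<!-- Illustrative sketch only: the version numbers are assumed. -->
<ItemGroup>
  <!-- Each of these packages is bound to a specific SpecFlow version, -->
  <!-- so bumping only one of them leaves the pair requiring different -->
  <!-- SpecFlow versions and the build fails. -->
  <PackageReference Include="SpecFlow.NUnit" Version="3.3.57" />
  <PackageReference Include="SpecFlow.Tools.MsBuild.Generation" Version="3.3.57" />
</ItemGroup>
```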

Our solution to this problem was to author a 'meta' NuGet package (sometimes called a 'virtual' package). Such a package doesn't deliver any of its own code (or other assets) but acts as a wrapper around a related set of other dependencies - in this case our testing tool-chain.
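As a rough illustration of the idea, a dependency-only package can be described with a .nuspec file that carries metadata and a list of dependencies but declares no files of its own. The package id, version, authors and description below are hypothetical placeholders, and the dependency versions are assumed for the sake of the example.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Minimal sketch of a dependency-only ('meta') package definition. -->
<!-- The id, version, authors and description are hypothetical placeholders. -->
<package xmlns="http://schemas.microsoft.com/packaging/2010/07/nuspec.xsd">
  <metadata>
    <id>Example.Testing.Meta</id>
    <version>1.0.0</version>
    <authors>Example Author</authors>
    <description>Groups a testing tool-chain behind a single package reference.</description>
    <dependencies>
      <!-- Example dependencies; versions are assumed for illustration. -->
      <dependency id="SpecFlow.NUnit" version="3.3.57" />
      <dependency id="SpecFlow.Tools.MsBuild.Generation" version="3.3.57" />
    </dependencies>
  </metadata>
</package>
```

A consumer that references such a package picks up the listed dependencies transitively, which is what lets a related set of packages be versioned and bumped as a unit.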

We discovered an existing meta package provided by the SpecFlow team, SpecFlow.NUnit.Runners, which addressed part of the problem. This, combined with a change made in more recent versions of SpecFlow to bundle SpecFlow.Tools.MsBuild.Generation with SpecFlow.NUnit, addressed the Dependabot conflict issues.

However, we wanted to include some additional packages to streamline the rest of our testing tool-chain.

Initial experiments were with a standalone .nuspec file, but we also wanted to include our main SpecFlow support library Corvus.Testing.SpecFlow, so in the end we created a new project in the same solution and crafted the required package dependencies. These included the SpecFlow meta package mentioned above as well as our default code coverage and mocking tools:

    <ItemGroup>
      <!-- The SpecFlow meta package, plus our default coverage and mocking tools -->
      <PackageReference Include="SpecFlow.NUnit.Runners" Version="3.3.57" />
      <PackageReference Include="coverlet.msbuild" Version="2.9.0" />
      <PackageReference Include="Moq" Version="4.14.5" />
      <PackageReference Include="Microsoft.NET.Test.Sdk" Version="16.6.1" />
    </ItemGroup>
    <ItemGroup>
      <!-- Our own SpecFlow support library, from the same solution -->
      <ProjectReference Include="..\Corvus.Testing.SpecFlow\Corvus.Testing.SpecFlow.csproj" />
    </ItemGroup>

Using dotnet pack to build the NuGet package worked, and updating the other projects to reference this one project instead of the multiple individual packages seemed to work too. Well... the build worked, but unfortunately running the tests in the solution was another matter!

The tests failed in a rather cryptic way:

    Error Message:
      System.InvalidOperationException : Result collection has not been started.
    Stack Trace:
      at TechTalk.SpecFlow.CucumberMessages.TestRunResultCollector.CollectTestResultForScenario(ScenarioInfo scenarioInfo, TestResult testResult)
      at TechTalk.SpecFlow.Infrastructure.TestExecutionEngine.OnAfterLastStep()
      at TechTalk.SpecFlow.TestRunner.CollectScenarioErrors()
      at Containers.NoContainerFeature.ScenarioCleanup()
      at Containers.NoContainerFeature.ContainerNotPresent() in C:\Corvus.Testing\Solutions\Corvus.Testing.SpecFlow.Specs\Containers\NoContainer.feature:line 8

It turned out that although our project appeared to build successfully with this new package, the results did not satisfy the test runner's requirements. Those errors were telling us that something catastrophic had happened in SpecFlow land.

If you've used SpecFlow, you'll know that it utilises code generation to turn the text-based feature files into executable specifications - so this was a rather large clue.

When referencing NuGet packages there are some less commonly used settings that can alter the dependency's behaviour and the extent to which it is available further up the dependency tree. The NuGet documentation for PackageReference proved a treasure-trove of such information.

According to that documentation, by default the 'build' package assets are marked as private (along with 'contentfiles' and 'analyzers'), meaning that whilst they will be available to the project that directly references the package (in this case our meta package project), they won't be available to any of its dependants (i.e. our specifications project where the tests reside).

Given SpecFlow's compile-time code generation features, it is understandable why the absence of the 'build' asset type might cause issues further up the dependency tree.
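Put another way, a plain PackageReference behaves roughly as if the default PrivateAssets value had been spelled out, as in the sketch below. The default shown reflects our reading of the NuGet documentation, so it is worth checking against the version of NuGet you are using.

```xml
<ItemGroup>
  <!-- Sketch of the implicit default: when PrivateAssets is omitted, -->
  <!-- NuGet treats these asset types as private, so the 'build' assets -->
  <!-- stop at the project that references the package directly. -->
  <PackageReference Include="SpecFlow.NUnit.Runners" Version="3.3.57">
    <PrivateAssets>contentfiles;analyzers;build</PrivateAssets>
  </PackageReference>
</ItemGroup>
```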

Armed with that knowledge we can override the PrivateAssets setting to ensure the 'build' assets are available (coverlet has similar requirements):

    <ItemGroup>
      <PackageReference Include="SpecFlow.NUnit.Runners" Version="3.3.57">
        <PrivateAssets>contentfiles; analyzers</PrivateAssets>
      </PackageReference>
      <PackageReference Include="coverlet.msbuild" Version="2.9.0">
        <PrivateAssets>contentfiles; analyzers</PrivateAssets>
      </PackageReference>
      <PackageReference Include="Moq" Version="4.14.5" />
      <PackageReference Include="Microsoft.NET.Test.Sdk" Version="16.6.1" />
    </ItemGroup>
    <ItemGroup>
      <ProjectReference Include="..\Corvus.Testing.AzureFunctions.SpecFlow\Corvus.Testing.AzureFunctions.SpecFlow.csproj" />
    </ItemGroup>

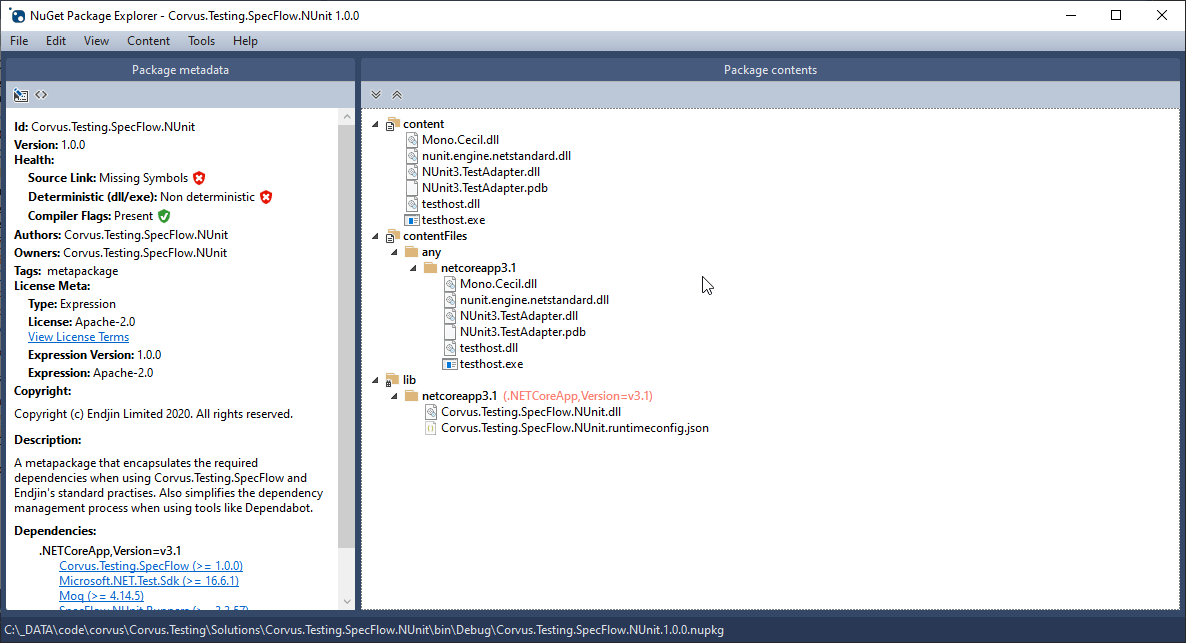

Now that the package is functionally correct we just have some tidying-up to ensure that the package doesn't contain anything extraneous. Inspecting the built package in NuGet Package Explorer shows us the following:

Here we are seeing:

- outputs from the dummy C# project we're using to define the meta package

- content files from some of the package dependencies

When prototyping the meta package using a .nuspec file we had been able to have an empty package that just had dependencies - so how can we coerce our project file to create a similarly sparse package?

Adding the following MSBuild properties to the project file will help:

<IncludeBuildOutput>false</IncludeBuildOutput>

<IncludeContentInPack>false</IncludeContentInPack>

This slims the built package down nicely.

Having added the above properties we now get the following warning when building the package:

warning NU5128: - Add lib or ref assemblies for the netcoreapp3.1 target framework

This is NuGet warning us that our package contains nothing for a consuming project to reference, which in most cases would be a useful warning. However, given that we're building a meta package this is to be expected so let's suppress that warning for this project by adding the following property to the project file:

<NoWarn>$(NoWarn);NU5128</NoWarn>

NOTE: If you're interested in more details about this warning, see the related GitHub issue discussion.
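Pulling the packaging-related settings together, the relevant part of the project file might look something like the following sketch. The TargetFramework value is inferred from the warning text above, and any other properties a real project needs (package id, version and so on) are omitted.

```xml
<PropertyGroup>
  <!-- Assumed from the NU5128 warning text; adjust to your own target. -->
  <TargetFramework>netcoreapp3.1</TargetFramework>
  <!-- Do not pack the dummy project's own build output. -->
  <IncludeBuildOutput>false</IncludeBuildOutput>
  <!-- Do not pack content files. -->
  <IncludeContentInPack>false</IncludeContentInPack>
  <!-- Having no lib or ref assemblies is expected for a meta package. -->
  <NoWarn>$(NoWarn);NU5128</NoWarn>
</PropertyGroup>
```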

Comparing the dependencies of our specifications project before and after all this work, we can see that its direct dependencies are greatly simplified: the several individual package references have been replaced by a single reference to the meta package.

In summary, having this meta package has allowed us to encapsulate our testing tool-chain in a single NuGet package. Going back to our original problem, where Dependabot was unable to bump the versions of our SpecFlow references, we are now in the following much improved situation:

- The meta package project has a single reference to the SpecFlow dependencies (SpecFlow.NUnit.Runners), which allows Dependabot to bump the previously problematic references in a single PR

- Other dependencies for the meta package are independent of each other, so Dependabot can bump them as it would normally

- The specifications projects have a single reference to the meta package, so any changes resulting from the previous bullet points can be integrated via the normal Dependabot process
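As a sketch of where this leaves a specifications project, its test tooling can be reduced to one reference. The package id and version here are hypothetical placeholders, not the name of our published package.

```xml
<ItemGroup>
  <!-- Hypothetical meta package id and version, for illustration only. -->
  <PackageReference Include="Example.Testing.Meta" Version="1.0.0" />
</ItemGroup>
```

With this shape, a Dependabot PR against the specifications project only ever needs to touch that single line.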

If you're interested you can get more details by looking at our Corvus.Testing open source project.