How to use Axios interceptors to poll for long-running API calls

This post looks at how Axios interceptors can be used to centralise polling logic in your UI application for long-running async API calls. Many frameworks (including endjin's Marain.Operations framework and Azure Durable Functions) implement the async HTTP API pattern to address the problem of coordinating the state of long-running operations with external clients.

Typically, an HTTP endpoint is used to trigger the long-running action, returning a status endpoint that the client application can poll to understand when the operation is finished.

When the calling client is a user-facing application, such as a web UI, it will usually want to wait for the outcome of the long-running operation so that it can inform the user if something went wrong.

In a modern single-page web application backed by an async RESTful API, this requirement is fairly common. The rest of this post looks at how to simplify and centralise the UI logic required to work with this API pattern.

The background

This example describes an approach based on a Nuxt.js application (a framework built on top of Vue.js), using the @nuxtjs/axios module as the JavaScript HTTP client. However, the principles and approach apply to any JavaScript client that can use the npm Axios module.

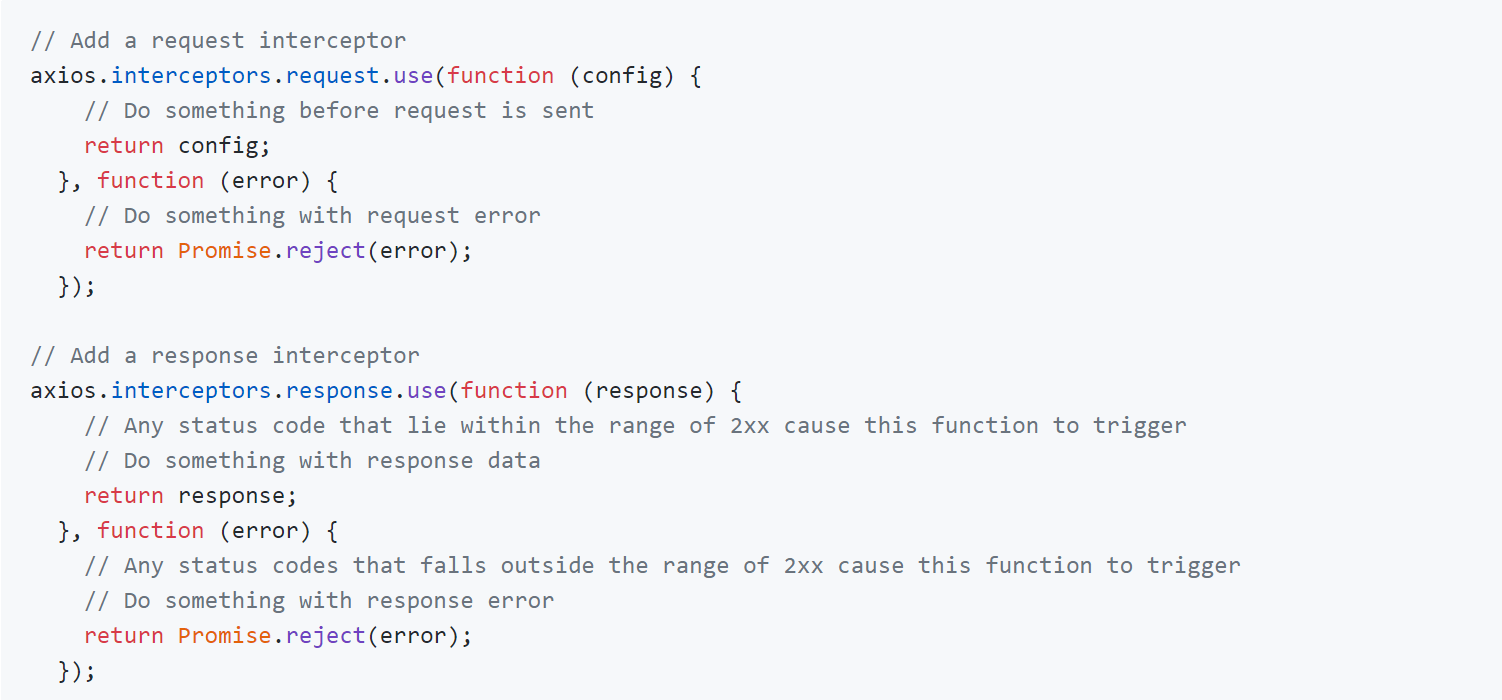

The approach relies on the built-in support for interceptors in Axios, which are centralised hooks that run on every request, response, or error. They can be configured when you set up your Axios instance:

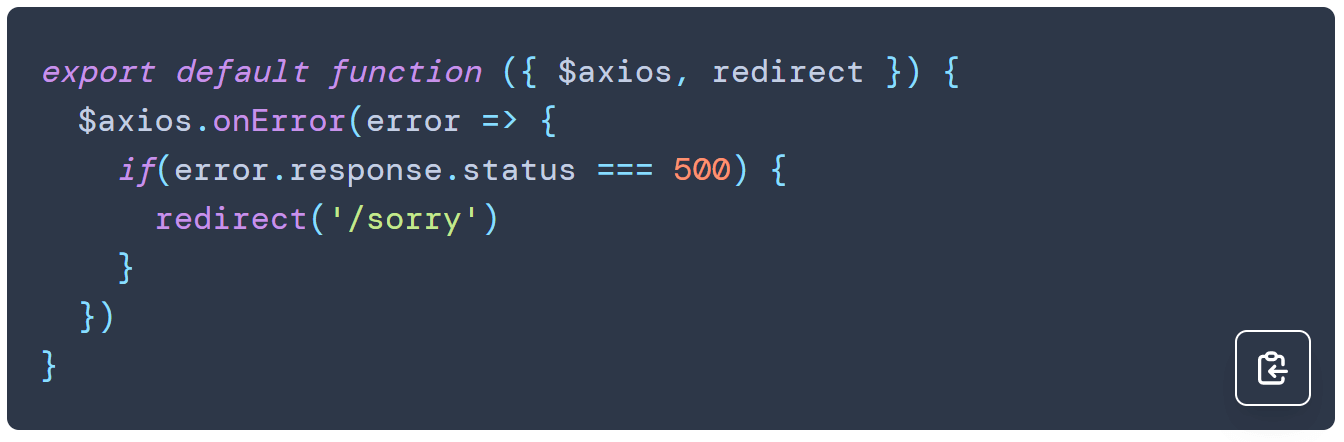
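As a rough sketch of what registering interceptors can look like, the hypothetical helper below attaches one request and one response interceptor using `interceptors.request.use` and `interceptors.response.use`. The function name `addInterceptors` and the `log` parameter are illustrative assumptions, and `client` is assumed to be an Axios instance (for example one created with `axios.create()`).

```javascript
// Attach simple logging interceptors to an Axios instance.
// ASSUMPTION: `client` is an Axios instance, e.g. `require('axios').create()`.
// `addInterceptors` and `log` are hypothetical names used for illustration.
function addInterceptors(client, log = console.log) {
  // Runs before every request is sent; it must return the config
  // (modified or not) so that the request can proceed.
  const requestId = client.interceptors.request.use((config) => {
    log(`-> ${String(config.method).toUpperCase()} ${config.url}`);
    return config;
  });

  // The first callback runs for successful responses (2xx by default).
  // The second runs for errors and re-rejects so callers still see them.
  const responseId = client.interceptors.response.use(
    (response) => {
      log(`<- ${response.status} ${response.config.url}`);
      return response;
    },
    (error) => Promise.reject(error)
  );

  // The ids can later be passed to `interceptors.*.eject` to remove the hooks.
  return { requestId, responseId };
}
```

Because the interceptors live on the instance, every call made through that instance passes through them without any change to the calling code.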

The Nuxt-specific version of the module takes this a step further, exposing helpers that can be used inside a plugin to extend the behaviour of the Axios module.
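A minimal sketch of such a plugin might look like the following, using the module's `onRequest`, `onResponse` and `onError` helpers. The file name and the logging behaviour are assumptions for illustration only. In a real plugin file the function would be the file's default export, but it is written as a named function here so the snippet runs on its own.

```javascript
// plugins/axios.js (hypothetical file name, listed under `plugins` in nuxt.config.js)
// In a real Nuxt plugin this function would be exported with:
//   export default axiosPlugin
function axiosPlugin({ $axios }) {
  // Called before each request is sent.
  $axios.onRequest((config) => {
    console.log(`Requesting ${config.url}`);
  });

  // Called for each successful response.
  $axios.onResponse((response) => {
    console.log(`Received ${response.status}`);
  });

  // Called for request or response errors.
  $axios.onError((error) => {
    const status = error.response ? error.response.status : 'no response';
    console.log(`Request failed: ${status}`);
  });
}
```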

These interceptors can be used to define our polling logic, meaning it can be centralised so that any API calls will automatically follow the same pattern.

The approach

The long-running async API pattern works as follows:

- The client application sends an HTTP request to trigger a long-running operation. This is typically limited to actions that perform some kind of state update, for example a POST or a PUT request

- The response will return an HTTP 202 Accepted status, saying the request has been queued for processing

- The response will also include a Location HTTP Header, specifying the URI of the status endpoint

- The client application will poll the status endpoint to retrieve the status of the operation (Waiting, Running, Succeeded, Failed, etc.)

- Once the operation has completed, the status endpoint will typically return another Location HTTP Header, specifying the URI of the resulting resource (i.e. the resource that has been created/updated)

- The client application issues a request to this URI to retrieve the resource

The following solution applies that pattern inside the Axios interceptor/helper function so that any code that issues HTTP requests will automatically follow the steps above.
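The sketch below shows one way the steps above could be wired into a response interceptor. It is illustrative rather than definitive, and it rests on several assumptions that are not part of the pattern itself: the status endpoint is assumed to return a JSON body with a `status` field, the names `pollOperation` and `addPollingInterceptor` and the `intervalMs` and `maxAttempts` options are hypothetical, and polling is only triggered for non-GET requests so that the polling requests themselves are never re-polled.

```javascript
// Resolve after `ms` milliseconds.
const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Poll `statusUrl` until the operation finishes, then fetch the resulting resource.
// ASSUMPTIONS: the status body has a `status` string ('Succeeded', 'Failed', ...)
// and a completed status response carries a `location` header.
async function pollOperation(client, statusUrl, { intervalMs = 1000, maxAttempts = 30 } = {}) {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const statusResponse = await client.get(statusUrl);
    const status = statusResponse.data && statusResponse.data.status;

    if (status === 'Succeeded') {
      const resourceUrl = statusResponse.headers && statusResponse.headers.location;
      // If no resource location is given, hand back the status response itself.
      return resourceUrl ? client.get(resourceUrl) : statusResponse;
    }
    if (status === 'Failed') {
      throw new Error(`Operation at ${statusUrl} failed`);
    }
    // Still waiting or running: pause before the next attempt.
    await delay(intervalMs);
  }
  throw new Error(`Operation at ${statusUrl} did not complete after ${maxAttempts} attempts`);
}

// Register a response interceptor that swaps a 202 response for the final resource.
// ASSUMPTION: `client` is an Axios instance (Axios lowercases response header names).
function addPollingInterceptor(client, options) {
  return client.interceptors.response.use((response) => {
    const method = String((response.config || {}).method || 'get').toLowerCase();
    const statusUrl = response.headers && response.headers.location;

    // Only state-changing requests start polling; GETs pass straight through.
    if (method !== 'get' && response.status === 202 && statusUrl) {
      return pollOperation(client, statusUrl, options);
    }
    return response;
  });
}
```

Because the interceptor returns a promise, Axios waits for polling to finish before resolving the original call. Capping the number of attempts is a design choice that stops a stuck operation from being polled forever. For cross-origin calls in a browser, the API also needs to expose the `Location` header through `Access-Control-Expose-Headers`, otherwise the interceptor cannot read it.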

The solution

Once the interceptor logic is in place, any requests that we need to make that return an HTTP 202 code will automatically be polled. The calling code will receive the response from the resulting resource location, without having to know or care about the long-running operation, as shown in the following example: