The Public Health England Test and Trace Excel error could have been prevented by this one simple step

Let's be clear - it wasn't Microsoft Excel that caused the loss of 16,000 Covid-19 test results at Public Health England in October 2020. Despite the media commentary suggesting otherwise, blaming the technology for the data truncation issues is like blaming your iPhone for not being waterproof after you've dropped it down the toilet. To put it simply, the data loss was caused by human error, and a basic lack of understanding of the tools and processes that had been put in place to gather, prepare and present the data.

But while the writing of this post has been heavily influenced by recent events, what happened at Public Health England isn't a unique case. If you're responsible for business, technology or data programmes, you're most likely aware of other similar high-profile cases involving, for example, a data breach, or major platform outage. So, if any of the commentary has resonated - maybe you're worried about ensuring quality, or reducing risks in your own projects - then this post should prompt you to consider the questions you ought to be asking, and describes a simple process that you can use to add confidence to your own critical initiatives.

Identifying undesired outcomes

From the outside, it seems like a fair assumption that the processes put in place to gather and prepare the data were driven by time and budget constraints. This resulted in using the lowest common denominator in tooling - CSV files and Excel. There wasn't time to build a bespoke automated system and roll it out to all the hospital trusts and testing laboratories, so the team worked with what they already had.

But when embarking on any critical initiative - be it a nationwide data gathering exercise, a new business process, or technology migration - you need to understand the nature of the risks involved that could impact your success, and the likelihood of those risks becoming a reality.

The missing step in the Test and Trace data ingestion process was identifying what could go wrong.

A good way to discover these undesired outcomes is to ask yourself some fairly blunt questions, like "what keeps you up at night?" or "what could get you fired?". In the Covid data loss scenario, these seem pretty obvious:

- Incorrect information being presented to the public, causing confusion and lack of confidence in the Government's strategy

- Incorrect information being presented to the Test and Trace teams, causing potential positive cases to go unidentified

- Incorrect information being presented to local authorities, impacting decision-making on local lockdowns

There's probably a lot more to add, but by simply identifying this first set of undesired outcomes, it's pretty apparent that data quality, underpinned by a reliable, repeatable process, was critical.

Identifying threats and barriers

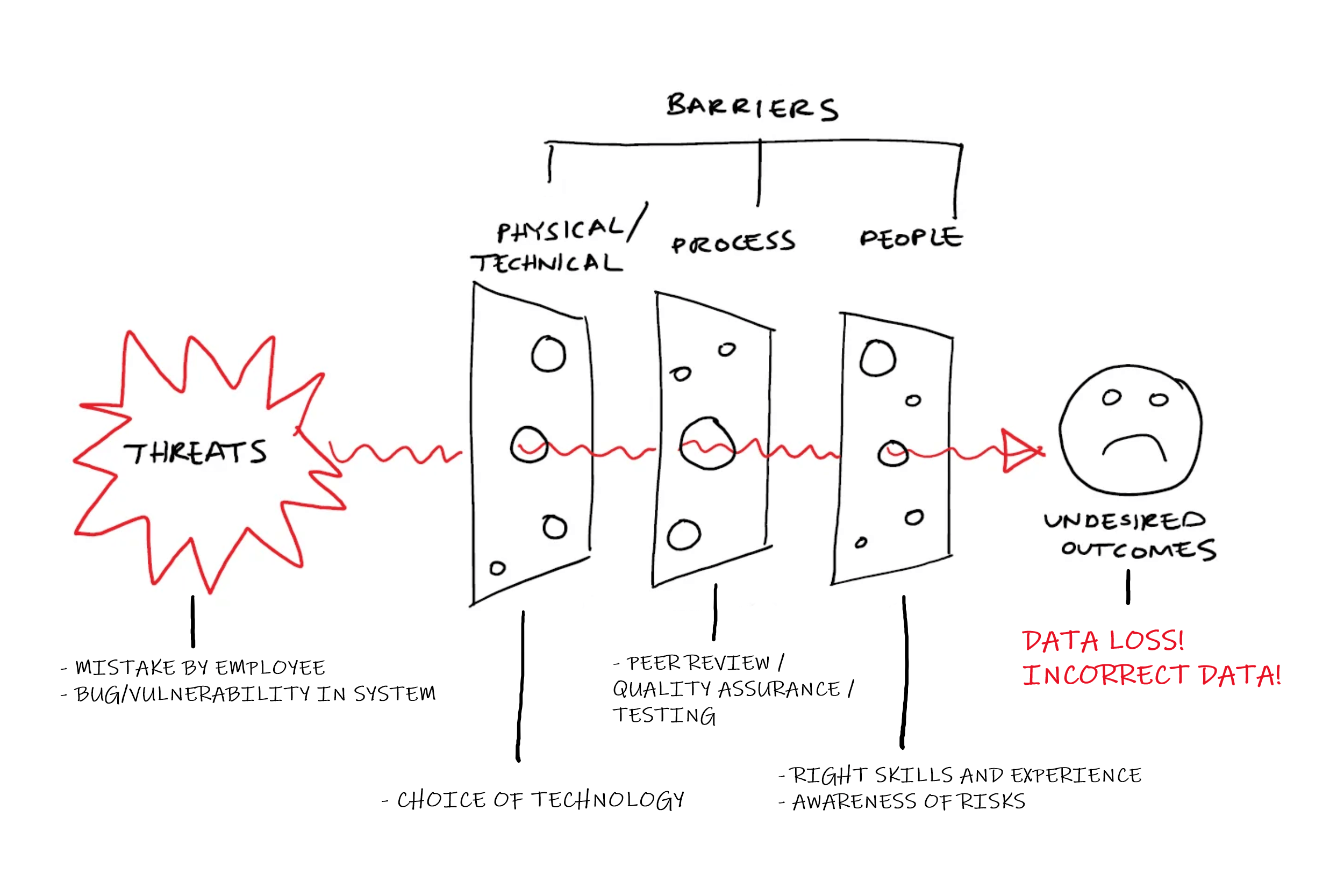

So, what next? Once you've identified what could go wrong, how do you reduce the chance of it happening? In order to successfully contain the risks or potential threats, a range of controls, or barriers, need to be put in place. These barriers typically fall into three broad categories:

- Technical (or physical) aspects inherent in the design and implementation

- Processes that are essential to managing risks such as regular maintenance, proactive monitoring and quality assurance

- People related attributes such as organisational design, training or communications

By now, some of you may already recognise the process I'm describing as our Swiss Cheese Model for risk and mitigation analysis. It was developed in partnership with one of endjin's customers to help them (successfully) get approval from the Financial Conduct Authority to move their data and workloads into Microsoft's Azure cloud platform. Their journey to the cloud started a number of years ago and, as early adopters, they had to build confidence among stakeholders, customers and regulators that they understood the risks involved, and had strategies in place to minimise and contain them.

We worked with the firm's board, the legal and compliance team, and technical leadership, to go through the process I'm describing to perform a comprehensive risk and mitigation analysis that allowed them to proceed with their eyes wide open. The barriers that they identified across technology, people and process, to prevent their undesired outcomes (around data breaches, poor performance and poor commercials) formed the basis of the policies and procedures that were woven into the cloud migration strategy across the organisation.

If you want to know more, we've published a series of more detailed articles around the process and how it was applied to cloud adoption, along with a freely downloadable poster that lists around 50 common barriers that will form a solid basis should you be embarking on a similar journey.

But, back to Public Health England. What barriers could/should have been put in place to prevent the undesired outcomes? Looking in from the outside, we only have a limited understanding of what happened, but the following diagram shows how what we know might map onto the Swiss Cheese Model.

When you start to look at the full picture, it becomes obvious that it wasn't Excel that caused the issues. As with any process, there were always going to be threats to success. In this case, the two that become immediately apparent are simple human error, and bugs or vulnerabilities in the systems/applications that are being used. So, the technology, process and people put in place should have been designed to prevent those threats from being realised.

To summarise what did happen:

- The choice of technology (Excel) was based on ease and speed of use, rather than ensuring quality of output

- A lack of quality assurance in the process meant that inaccuracies in the data would go unnoticed

- A lack of awareness of the risks and/or a lack of training and experience meant the overall process wasn't questioned

- Human error caused the wrong file format to be used, meaning data was truncated when importing from CSV

- The combination of the above resulted in incorrect data being presented to the public

It's worth highlighting again that the maximum row count within Excel wasn't a bug - it was a well-documented limitation - but it certainly created a vulnerability that went unnoticed. The choice of the .XLS file format, instead of .XLSX, meant that this vulnerability was massively increased, as the maximum row count was actually 65,536, rather than the 1,048,576 available in more modern versions of Excel. Whether this was a deliberate choice (unlikely), or an accidental one, doesn't matter - the impact remains the same, and it could have easily been prevented had the right barriers been put in place.
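To make the point concrete, here is a minimal sketch of the kind of technical barrier that could sit in front of an import step: count the rows in the incoming CSV and refuse to continue if they won't fit in the target format. The row limits are Excel's documented worksheet limits; the function names and the in-memory sample data are hypothetical, and nothing here is a description of what Public Health England actually ran.

```python
import csv
import io

# Documented worksheet row limits for each Excel file format.
MAX_ROWS = {"xls": 65_536, "xlsx": 1_048_576}


def count_csv_rows(stream):
    """Count the records in a CSV stream, including any header row."""
    return sum(1 for _ in csv.reader(stream))


def check_fits(stream, file_format):
    """Return the row count, or raise ValueError if the target format cannot hold it."""
    limit = MAX_ROWS[file_format]
    rows = count_csv_rows(stream)
    if rows > limit:
        raise ValueError(
            f"{rows} rows will not fit in .{file_format} (limit {limit}); "
            f"{rows - limit} rows would be lost"
        )
    return rows


# Hypothetical in-memory sample standing in for a real CSV export.
sample = io.StringIO("\n".join(f"{i},negative" for i in range(70_000)))
try:
    check_fits(sample, "xls")
except ValueError as err:
    print(err)  # the check fails loudly instead of silently dropping rows
```

The design choice that matters is that the check fails loudly. A silent truncation only becomes visible when someone notices the numbers look wrong, whereas an exception stops the process at the point where the data would otherwise be lost.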

Of course - the barriers are stronger when they work together, and weaker when they work against each other. Adding automated testing / quality assurance to an Excel workbook, for example, is definitely hard. As a "business user" tool, it focuses on enabling and empowering quick insights and answers, over and above enforcing a methodical and well-documented approach. But if the data is so critical (e.g. if people's lives are at stake) that an Excel "model" needs to be version controlled, tested and updated using repeatable and reliable processes that have built-in quality gates, maybe it isn't the right tool for the job in the first place. And if it's your only option, then the other barriers (people and process) are your only line of defence.

When it all goes wrong

The process is called the "Swiss Cheese Model" as it recognises that each barrier/layer might contain holes. Having multiple layers of protection (technical, process and people) means the chance of the undesired outcomes happening is minimised, but it would be naive to think that it's impossible to penetrate all the layers, either deliberately or unexpectedly. So what happens when "all the holes line up"? The last step in the process is accounting for mitigation strategies, should the worst case actually happen.

Going back to the cloud adoption example, various containment strategies can be put in place to deal with unplanned outages or data breaches. Most organisations have crisis communication plans, business continuity plans and disaster recovery plans which can be adapted and enhanced accordingly to deal with the new breed of exposure. Acknowledging that "bad things can happen" is the most important step in figuring out what you'd need to do about it if they did.

But it seemed from the outside that this hadn't been a consideration for Public Health England. Depending on what you read, we've learned that the Test and Trace teams weren't informed about the missing data for around a week. And the public saw the additional figures appended to the daily counts published over a couple of days. Was the data truncation issue expected? No. Did they have a communication and mitigation process for dealing with incorrect figures being reported? It certainly doesn't appear so.

They have now reportedly "fixed" the problem by "breaking down the test result data into smaller batches to create a larger number of Excel templates" to avoid the row count limits. Looking back at the Swiss Cheese diagram above, it's concerning that none of the barriers have been strengthened. If anything, the process now has extra levels of complexity as files are now split and re-joined along the way. The technology risk hasn't disappeared, it's just changed shape, and without extra barriers, the same undesired outcomes are still a significant reality.
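As an illustration of the kind of extra barrier a split-and-rejoin process would need, the sketch below reconciles the re-joined batches against the source: every record identifier should appear exactly once. It assumes each test result carries a unique identifier, which is an assumption for the sake of the example, and the function names are hypothetical.

```python
from collections import Counter


def split_into_batches(record_ids, batch_size):
    """Split a list of record IDs into consecutive batches of at most batch_size."""
    return [
        record_ids[i:i + batch_size]
        for i in range(0, len(record_ids), batch_size)
    ]


def reconcile(source_ids, batches):
    """Compare re-joined batches with the source; return (missing, duplicated) ID sets."""
    seen = Counter(rid for batch in batches for rid in batch)
    missing = set(source_ids) - set(seen)
    duplicated = {rid for rid, count in seen.items() if count > 1}
    return missing, duplicated


# Hypothetical data: ten record IDs split into batches of four.
source = list(range(10))
batches = split_into_batches(source, 4)

# Simulate a batch going astray somewhere between splitting and re-joining.
missing, duplicated = reconcile(source, batches[:-1])
print("missing:", sorted(missing), "duplicated:", sorted(duplicated))
```

A check like this doesn't remove the complexity that batching introduces, but it does mean a lost or double-counted batch is caught before the figures are published rather than after.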

Summary

The recent high profile issues in the Test and Trace data programme could have been avoided if a comprehensive risk and mitigation analysis had been performed. This process can be done quite simply, as this post has described, by firstly identifying the undesired outcomes, and then putting in place the right set of barriers to minimise the chances of them happening.

In the Public Health England case, the threat of human error was realised, and there were no (or inadequate) barriers in technology, people or process to prevent a full breach, ultimately resulting in incorrect data being presented to the public and the Test and Trace teams.