Bye bye Azure Functions, Hello Azure Container Apps: Introduction

TL;DR: Azure Functions are a good choice for asynchronous and reactive workloads, but for building HTTP APIs they have significant drawbacks, most notably cold start times. Azure Container Apps provide an alternative platform for hosting containerised workloads, with lower costs, particularly when your containers are idle.

The promise and reality of Azure Functions

When Azure Functions first came on the scene a few years ago, they sounded almost too good to be true. Consumption-based pricing with automatic scale-out to a huge number of instances? Yes please! The ability to trigger Functions from HTTP requests, queue messages, events from Event Grid, or on a cron schedule? Absolutely!

For scenarios such as asynchronous message processing (or tasks that are similarly reactive in nature), Azure Functions are an excellent choice. However, what we've learned from experience is that they aren't the panacea we'd hoped for to build truly serverless HTTP APIs.

The biggest problem we've seen when building HTTP APIs using Azure Functions on the consumption plan is the cold start time; in short, it can be pretty horrible. This isn't necessarily a problem if your Function is, for example, processing messages from a queue. But if you're building an HTTP API then it can be a significant issue, as the first call to your endpoint will have someone on the other end of it, waiting for a response.

This is exacerbated when we're building more complex HTTP APIs, as there are several components of startup: platform, runtime and application. In simple message handling scenarios, it's likely that your code doesn't need a huge amount of setup. But in more complicated APIs, you may need to do a number of things (configuring DI containers, accessing services such as Key Vault, and so on), all of which add to that startup time.

We also found that under any kind of load, scale out happens much faster than we originally expected, so that cold start up time can impact more than just the first request. Our big problem is that if you have a microservices-based architecture where an incoming request can involve making calls to multiple other services, you can incur that cold start penalty multiple times for a single user request to your API. And when there's a user waiting on the other end for a response, this results in a particularly poor experience.

There are a couple of ways this can be addressed if you're set on hosting your API in Azure Functions. One option is the Azure Functions Premium plan. This allows you to keep perpetually warm instances of your Functions, meaning that you don't incur the cold start penalty as frequently. You can host multiple function apps inside a single premium plan to get more bang for your buck. Pricing is still based on consumption, but because you're keeping things "always on" you incur a minimum spend of over £100/month - you can see the pricing here.

The other way is to use a Dedicated plan, which just means you run your Functions in an App Service plan, priced at the normal app service plan rates. You can read more about this here.

These are reasonable options for some scenarios, but is there a way of getting closer to what we'd originally hoped Azure Functions would give us? Specifically, a way of hosting our APIs that can scale down to the point where it's costing us next-to-nothing, but still scale up on demand without incurring painful cold start times?

Introducing Azure Container Apps

Azure Container Apps (ACA) went GA in May 2022 and provides a platform for hosting containerised applications without needing to manage complex infrastructure such as Kubernetes clusters (which have significant overhead even when using something like Azure Kubernetes Service).

It has some immediately obvious advantages - containerisation means that you can host pretty much anything in the service - microservices, background services, web applications, etc. You can build those things in whatever language you like - as long as you can build it into a Linux container, you can run it in ACA.

Note: For a good introduction to containerisation and Docker, see my fellow endjineer Liam's well-named post Introduction to Containers and Docker.

Like Azure Functions, ACA pricing is consumption-based and you have the option for your containers to scale down to zero if needed. Obviously this wouldn't help with the cold start issues we've covered above, but it's also possible to set a minimum number of replicas for your container, which would have much the same effect as the Premium Functions plan mentioned above. However, there's a potential advantage over the Premium Functions Plan; the notion of containers becoming inactive - meaning they are still provisioned but idle - in which state the cost is significantly lower.

Cost Modelling Azure Container Apps

The pricing for ACA is not the easiest to understand. You can see it here. Here's my attempt to summarise it:

- There are two "modes" for a container; "Active" and "Inactive". Pricing is different for each mode.

- Your container is "Active" when it's doing work - starting up, processing a request, etc. There are thresholds for vCPU and bandwidth usage above which your container is considered Active.

- At other times, your container is "Inactive".

- You are billed based on the number of vCPU cores and the amount of memory you specify when you provision the container app.

- The charge for memory usage is constant, regardless of the container's mode

- The charge for CPU usage is ~8 times lower when your container is inactive.

- You're also billed based on incoming requests at the ridiculously low amount of £0.33 per million requests

- There's a monthly free grant of:

  - 180,000 vCPU-seconds (50 hours)

  - 360,000 GiB-seconds (100 hours)

The difference between active and inactive modes is particularly interesting because it should allow us to keep hot instances of our containers available for a relatively small amount of money.

We ran a small proof-of-concept to deploy one of our existing Functions-based APIs into Container Apps so we could see what kind of performance and cost characteristics we'd get. This was encouraging; response time was much faster when we had an idle instance of the app provisioned. However, the cold start problem is still significant if the app is allowed to scale all the way down to 0 running instances. This suggests that we'll want to ensure we've always got a single container instance provisioned, which means we need to determine if idle pricing works as we expected.

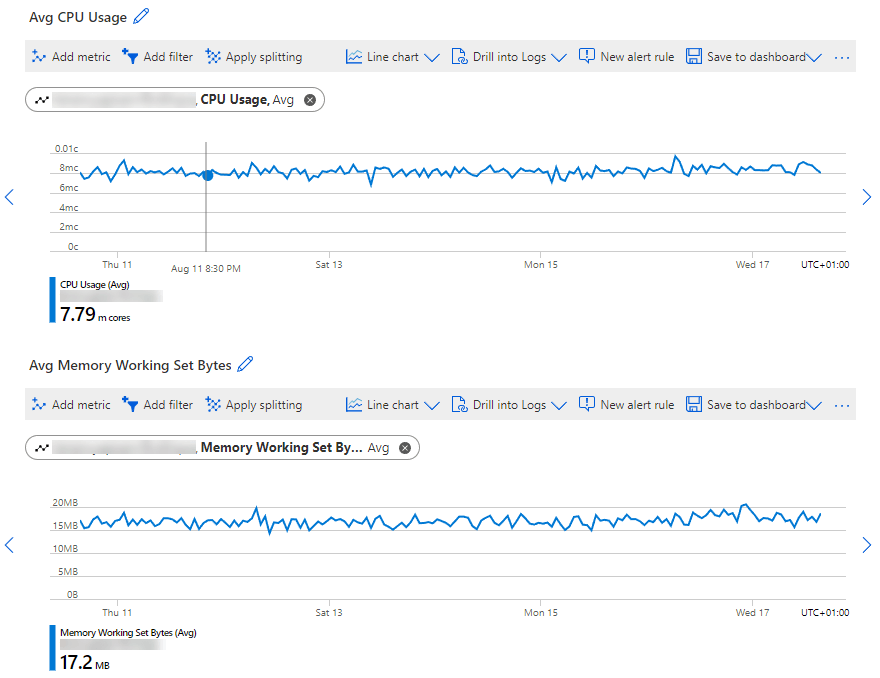

Here's a shot of CPU usage and memory consumption for our POC container app, which has been idle recently:

As you can see, average CPU usage over the last 7 days is around 8mc (1mc == 1 milli-core, i.e. 0.001 cores), and average memory working set is around 17MiB. This puts us firmly in the "idle usage price" category. The container app is provisioned with 0.5 vCPU and 1GiB RAM (the minimum is 0.25 vCPU and 0.1 GiB RAM).

Given that provisioning of 0.5 vCPU cores and 1 GiB of memory, we can produce a rough estimate of the monthly cost for a fully idle container app deployed into North Europe:

vCPU Cost = [Provisioned CPU] * [Idle usage price per second] * 60 * 60 * 24 * 30

= 0.5 * £0.0000025 * 60 * 60 * 24 * 30

= £3.24

Memory Cost = [Provisioned GiB] * [Idle usage price per second] * 60 * 60 * 24 * 30

= 1 * £0.0000025 * 60 * 60 * 24 * 30

= £6.48

Total fully idle cost = £9.72/month

We can do a similar calculation based on active pricing for a fully active container. Active pricing for vCPU is 8x idle pricing, but the memory cost is the same:

vCPU Cost = [Provisioned CPU] * [Active usage price per second] * 60 * 60 * 24 * 30

= 0.5 * £0.0000200 * 60 * 60 * 24 * 30

= £25.92

Memory Cost = [Provisioned GiB] * [Usage price per second, same as idle] * 60 * 60 * 24 * 30

= 1 * £0.0000025 * 60 * 60 * 24 * 30

= £6.48

Total fully active cost = £32.40/month
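The two estimates above can be folded into one small calculation. This is only a sketch under stated assumptions: the per-second prices are the figures used in the worked numbers above, the function name `monthly_cost` and the `active_fraction` parameter are made up for illustration, and blending the vCPU price linearly by time spent active is my simplification rather than the official billing formula. It ignores the free grant and per-request charges.

```python
SECONDS_PER_MONTH = 60 * 60 * 24 * 30  # 30-day month, as in the estimates above

# Per-second prices in GBP, copied from the worked figures above.
VCPU_IDLE_PRICE = 0.0000025
VCPU_ACTIVE_PRICE = 0.0000200
MEMORY_PRICE = 0.0000025  # memory is charged at the same rate in both modes


def monthly_cost(vcpu: float, memory_gib: float, active_fraction: float) -> float:
    """Estimate the monthly cost in GBP of one always-provisioned replica.

    active_fraction is the share of the month spent in the Active mode
    (0.0 = fully idle, 1.0 = fully active). The linear blend is an
    assumption made for this sketch.
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    vcpu_price = (active_fraction * VCPU_ACTIVE_PRICE
                  + (1 - active_fraction) * VCPU_IDLE_PRICE)
    vcpu_cost = vcpu * vcpu_price * SECONDS_PER_MONTH
    memory_cost = memory_gib * MEMORY_PRICE * SECONDS_PER_MONTH
    return round(vcpu_cost + memory_cost, 2)


print(monthly_cost(0.5, 1.0, 0.0))  # fully idle
print(monthly_cost(0.5, 1.0, 1.0))  # fully active
```

With the 0.5 vCPU / 1 GiB profile this reproduces the £9.72 and £32.40 totals, and intermediate values of `active_fraction` give a rough feel for where a real workload might land between them.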

The cheapest Premium Functions Plan is $152.31/month (around £127 at time of writing). This gives you 210 ACU and 3.5 GiB memory.

ACU stands for "Azure Compute Unit", a metric created to provide a way of comparing compute performance over different Azure SKUs. You can read more about it here. Reviewing the documentation on the Premium plan instance SKUs suggests that this maps to 1 core.

This means we're not exactly comparing apples to apples, since our premium plan would have more resource dedicated to the Function Apps it hosts than we're allocating to our container app in the calculations above. But any of that capacity you don't use is wasted.

So, does this mean ACA is better? As always, the answer is "it depends". Specifically, it depends on how many container apps you want to run, how much resource you'll need to allocate to each, and your requirements around standby instances. So let's have a look at one of our internal applications which currently has its APIs hosted in Azure Functions.
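To make the "it depends" a little more concrete, here is a rough break-even sketch. It assumes every container app uses the same 0.5 vCPU / 1 GiB profile costed above and takes the approximate £127/month Premium plan figure as the budget; the function name `apps_within_budget` is hypothetical, and the comparison deliberately ignores the resource differences between the two options.

```python
import math

PREMIUM_PLAN_GBP = 127.0  # cheapest Premium plan, approximate figure quoted above
IDLE_APP_GBP = 9.72       # one 0.5 vCPU / 1 GiB container app, fully idle
ACTIVE_APP_GBP = 32.40    # the same container app, fully active


def apps_within_budget(budget: float, cost_per_app: float) -> int:
    """Return how many always-on container apps fit within a monthly budget."""
    return math.floor(budget / cost_per_app)


print(apps_within_budget(PREMIUM_PLAN_GBP, IDLE_APP_GBP))    # mostly idle apps
print(apps_within_budget(PREMIUM_PLAN_GBP, ACTIVE_APP_GBP))  # constantly busy apps
```

On these numbers, roughly 13 mostly idle apps or 3 constantly busy ones would cost about the same as the cheapest Premium plan, which is why the number of apps and how busy they are matter so much.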

The Application to Migrate

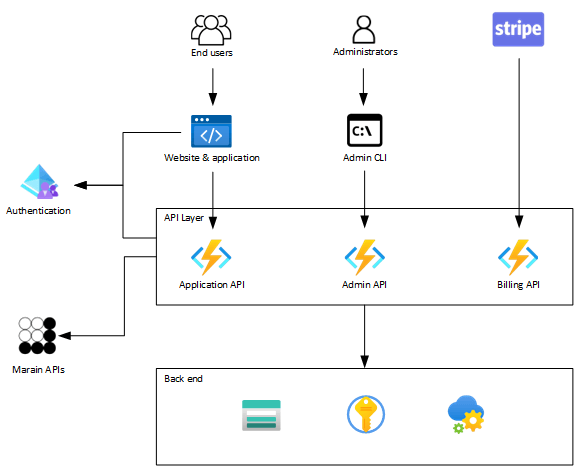

Our existing application, Career Canvas, has the following characteristics:

- SPA web application (using Vue.js)

- Three APIs:

  - The API that's used by the front end

  - An API for integration with our billing platform, Stripe

  - An Admin API, primarily consumed by a CLI tool

- All back-end code written in C# using .NET 6.0

- All data stored as blobs in Azure Storage

- APIs currently hosted in Azure Functions (using our OpenAPI specification-first Menes framework)

- Front end hosted in Azure Static Websites

- Also uses our Marain services to provide cross cutting concerns such as multi-tenancy and claims.

We also have automated build and deployment scripts run in Azure DevOps:

- Separate CI builds for the back-end and front-end code. The CI builds publish a zip file containing the compiled/published code ready for deployment.

- Separate deployment pipelines for the back-end and front-end code

Local dev for the back end is currently done using Visual Studio; for the front end our standard approach is to use Visual Studio Code with development taking place inside a dev container.

We have three APIs to migrate; if we assume they can be deployed with the same resource profile as our test application then that gives us a cost of between around £30/month (fully idle) and £100/month (fully active). However, we've also determined that only two of those APIs need to be "always on", so in fact the cost is more likely to be somewhere between £20/month and £65/month.
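The ranges quoted here come straight from multiplying the per-app estimates. As a minimal sketch (the function name `cost_range` is made up, and it assumes any API that isn't "always on" scales to zero and costs nothing while idle):

```python
IDLE_APP_GBP = 9.72     # per-app monthly estimate, fully idle
ACTIVE_APP_GBP = 32.40  # per-app monthly estimate, fully active


def cost_range(always_on_apps: int) -> tuple[float, float]:
    """Return (fully idle, fully active) monthly cost in GBP for N always-on apps."""
    return (round(always_on_apps * IDLE_APP_GBP, 2),
            round(always_on_apps * ACTIVE_APP_GBP, 2))


print(cost_range(3))  # all three APIs always on
print(cost_range(2))  # only two APIs always on
```

Three always-on APIs give about £29 to £97 per month, and two give about £19 to £65, which is where the rounded figures above come from.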

So purely from a pricing perspective, moving our application to hosting in Azure Container Apps will be more cost-effective than using an Azure Functions Premium plan or an App Service plan. As mentioned above, this may not be the case for applications with more APIs or heavier resource requirements - it will all depend on your exact architecture and non-functional requirements.

However, for our use case, the next step was to take our application and move its HTTP APIs into ACA.

The Migration Plan

As we saw it at the start of the process, the plan was:

- Migrate the existing Function Apps to run as standard ASP.NET Core .NET 6.0 apps.

- Update our existing back-end build and deployment pipelines to output, consume and deploy container images instead of zipped-up code.

- Remove the function apps from our existing test environment and redeploy the container apps, leaving other components of the environment (storage, App Config, Key Vault, etc) in place.

Once we'd done this, we also planned to put some of the learnings from James Dawson's Adventures in Dapr to good use.

As always, things weren't quite that smooth. Over the next few posts, I'll cover some of the gotchas involved in the process and the end state that we reached.